CPSC 203, Lecture 20


Housekeeping

- FINAL EXAM: Saturday, December 13th, 12 p.m. to 2 p.m. in KN GOLD

Required Reading

"The Last Lecture: Achieving Your Childhood Dreams" by Randy Pausch is one of the most widely cited YouTube videos. While certainly not a "Required Reading" for this course, it is recommended as a unique perspective on life. Enjoy!

Recap of Major Ideas from Lectures 15 - 19

In these lectures, we explored how logic can be used for problem solving, how questions in logic led directly to the invention of the computer, and how Boolean Logic Operators can be viewed from several perspectives: set-theoretically, via truth tables, via circuit diagrams, and via simple programs.

- Boolean Logic is a logical system in which every proposition is assigned a truth value of 1 (True) or 0 (False), and propositions can be combined via a small set of Boolean Logic Operators. The Boolean operators can be represented by Truth Tables: for a given set of inputs and a specific operator, there is a specific output. (Lecture 15)

- Using Boolean Logic and Truth tables we can determine the validity of our inferences, based on a set of propositions. A propositional inference is valid IF AND ONLY IF there is no possible case where the premises are all true and the conclusion is false. (Lecture 16)

- Each Boolean operator corresponds to a specific digital circuit (a logic gate). By "wiring together" such circuits according to a particular circuit diagram, we can build machines that implement Boolean logic to solve specific problems. (Lecture 17)

- A Turing machine is an idealized computer that can embody an algorithm. The Turing machine was used to demonstrate that certain questions in logic are undecidable (no mechanical procedure can settle them in every case). It also led directly to the race to build a working computer: the "Von Neumann" architecture took the Turing Machine concept and physically embodied it. Every general-purpose computer is, in principle (given unlimited memory), a Universal Turing Machine. (Lecture 18)

- Finally, we examined the thought process of programming computers, introducing two complementary programming styles: Pseudocode Driven Programming and the Functional Style of Programming. The small programs we developed embody the basic Boolean Logic Operators we began with in Lecture 15. (Lecture 19)
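The sketch below pulls several of these recap ideas together in Python, the language of the Zelle text listed under Resources. It shows Boolean operators as small functions and a truth-table generator. It also includes a brute-force validity check that looks for a row where every premise is true and the conclusion is false, and a half adder "wired" from gates. All function names (AND, OR, truth_table, is_valid, half_adder) are our own illustrative choices, not code from Lectures 15-19.

```python
from itertools import product

# Boolean operators written as small functions on True/False values.
def AND(p, q):
    return p and q

def OR(p, q):
    return p or q

def NOT(p):
    return not p

def IMPLIES(p, q):
    # "if p then q" is false only when p is true and q is false
    return (not p) or q

def truth_table(op):
    """Return rows (p, q, output) for a two-input operator, using 1/0."""
    return [(int(p), int(q), int(op(p, q)))
            for p, q in product([True, False], repeat=2)]

def is_valid(premises, conclusion, num_vars):
    """An inference is valid iff no assignment of truth values makes
    every premise true while making the conclusion false."""
    for values in product([True, False], repeat=num_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False  # found a counterexample row
    return True

# Modus ponens: from "p implies q" and "p", conclude "q".
modus_ponens = is_valid([lambda p, q: IMPLIES(p, q), lambda p, q: p],
                        lambda p, q: q, num_vars=2)

# Affirming the consequent: from "p implies q" and "q", conclude "p".
affirming_consequent = is_valid([lambda p, q: IMPLIES(p, q), lambda p, q: q],
                                lambda p, q: p, num_vars=2)

def XOR(p, q):
    # Built only from AND, OR and NOT, the way gates are wired in a circuit.
    return AND(OR(p, q), NOT(AND(p, q)))

def half_adder(a, b):
    """Add two 1-bit numbers; returns (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)

for row in truth_table(AND):
    print(row)
print("modus ponens valid:", modus_ponens)
print("affirming the consequent valid:", affirming_consequent)
print("1 + 1 as (sum, carry):", tuple(int(b) for b in half_adder(True, True)))
```

Checking every row is exactly the truth-table method from Lecture 16. It is fine for a handful of propositions, but the number of rows doubles with each new variable.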

Introduction

Problem solving is fundamental to computer science and at the heart of programming. But problem solving is not limited to computing: it occurs in every area of life, from dealing with large-scale national policy issues to fine-tuning your favorite recipe. Problem solving often involves two thought patterns: abstraction, to whittle a problem down to its essence, and analogy, to make connections to possible solutions.

In our last formal lecture, we look at problem solving in terms of design. We briefly mention several approaches to design, introduce one common approach called "Top Down Design" (which we illustrate with a few examples), and finally look at search engines from a Top Down Design perspective. We end with arguably one of the most used algorithms today: the Page Rank algorithm, which is the basis of Google's search engine.

By the end of this lecture:

- You will have been introduced to several problem-solving approaches.

- You will be able to apply top-down design to a problem and create a decision tree of sub-problems.

- You will understand how a web search engine works from a top-down design perspective, with a closer look at how Page Rank technology works.

- Finally, we will raise some questions about how search engine technology may relate to broader social issues, such as:

  - economics

  - privacy

  - freedom

Having begun with a big-picture view of the Internet in our early lectures, we end there too, with some speculations about the evolving structure of the Internet and the effect of technology on social issues.

Glossary

New Design Terms (for problem solving)

- Prototype -- Minimal Working Model (e.g. a prototype car might have only the parts you interact with, but none of the working machinery underneath). Prototyping often becomes part of a 'spiral development process', in which we rapidly iterate through a series of prototypes, adding new features in each round. This approach lets one quickly try out a number of design alternatives without much up-front effort.

- Top Down Design -- Breaking a problem down into nested components (see modularity). What results is usually a 'Decision Tree', which is much like the Attack Trees previously encountered.

- Modularity -- Building systems out of components that can be treated as 'black boxes' (their internals hidden) and that connect to other components through clear interfaces. Often used to reduce complexity in a system.

- Interface -- what the user can see and interact with. Interfaces SHOULD BE easy, intuitive, versatile. Good Examples: Pencil, Doorknob. Bad Examples: Universal Remotes, Many software interfaces.

- Object Oriented Design -- Object oriented design can be considered a complement to top-down design. In object oriented design you define (a) a set of object types, called 'classes', (b) what the objects' attributes are and what they can do (their 'data' and 'methods', respectively), and (c) the relationships between objects (including their interfaces, i.e. what each object sees of another when they interact). If top-down design leads to 'tree-like networks', object oriented design often leads to more complex networks.
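The object-oriented ideas above can be sketched in Python with a hypothetical Car/Engine example of our own, echoing the prototype-car example. Each class bundles data (attributes) and methods. Car relates to Engine only through Engine's interface (start and is_running), treating it as a black box, which is the modularity idea in miniature.

```python
class Engine:
    """A component treated as a black box: callers use its methods and
    never touch its internal state directly."""

    def __init__(self, horsepower):
        self._horsepower = horsepower   # data (attribute), kept internal
        self._running = False

    def start(self):                    # method: part of the interface
        self._running = True

    def is_running(self):               # method: part of the interface
        return self._running


class Car:
    """A Car *has an* Engine; the relationship between the two objects
    goes only through the Engine's interface."""

    def __init__(self, name, engine):
        self.name = name
        self.engine = engine

    def drive(self):
        if not self.engine.is_running():
            self.engine.start()
        return f"{self.name} is driving"


car = Car("prototype", Engine(horsepower=150))
print(car.drive())
print(car.engine.is_running())
```

Because Car never reads `_running` directly, the Engine's internals could be redesigned without changing Car. This is why clear interfaces reduce complexity.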

Older Problem Solving Terms from Previous Lectures

- Cause/Effect/Goals -- We have a basic model of causality in which things that occur earlier in time, or upon which other things depend, are "causes", and things that occur later in time, or are the consequences of dependencies, are "effects". This basic intuitive model is often complicated by the notion of "goals", where some future outcome causes us to take action in the present to realize that outcome.

- Conditional probability (in terms of cause/effect) -- The probability of an effect B given that a prospective cause A has already happened, usually written P(B|A). Thinking about conditional probability in terms of causes and effects is a way of working out the "structure" of a problem, by noting which components of a system are interdependent. Once this knowledge is available, specific techniques such as Top Down Design, Object Oriented Design, and Prototyping can be applied.

- Heuristics -- 'Rules of thumb; informal procedures'. Not guaranteed to find a solution.

- Algorithm -- A mechanical procedure: a series of steps (usually sequential) for solving a problem, capable of being run on a 'universal computer'. Note -- Turing used his machine to ask whether a mechanical system could determine, for any particular algorithm, whether it would ever halt (i.e. reach a solution), and showed that no such general procedure exists. This connects to a question in mathematics: is there a mechanical procedure that can decide, for any statement, whether it can be proven?
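To make P(B|A) concrete, the sketch below estimates it by counting, using the standard identity P(B|A) = P(A and B) / P(A). The observations are made up purely for illustration, and the function names are hypothetical.

```python
from fractions import Fraction

# Hypothetical observations: (did cause A happen?, did effect B happen?)
observations = [
    (True, True), (True, True), (True, False), (True, True),
    (False, False), (False, True), (False, False), (False, False),
]

def conditional_probability(obs):
    """Estimate P(B | A) = P(A and B) / P(A) by counting cases."""
    count_a = sum(1 for a, b in obs if a)
    count_a_and_b = sum(1 for a, b in obs if a and b)
    if count_a == 0:
        raise ValueError("A never happened, so P(B | A) is undefined")
    return Fraction(count_a_and_b, count_a)

def probability(obs):
    """Estimate P(B), ignoring whether A happened."""
    return Fraction(sum(1 for _, b in obs if b), len(obs))

print("P(B | A) =", conditional_probability(observations))
print("P(B)     =", probability(observations))
```

With this data P(B|A) is 3/4 while P(B) is 1/2. Knowing that A happened changes how likely B is, which is the kind of interdependence between components that the glossary entry describes.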

Concepts

- Problem Solving Thought Processes

- Problem Solving Examples

Problem Solving Thought Processes

Two key thought processes go into problem solving:

Abstraction: Whittling away details of a problem, until its basic attributes are clear. For example, a dots-and-edges mathematical model of a real world situation whittles away all the details until all that is left are the basic objects (nodes) and their relationships (edges).
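A dots-and-edges model can be written down directly in Python as a dictionary mapping each node to the nodes it connects to. The food web below is a hypothetical example of our own. Each edge reads "is eaten by".

```python
# A dots-and-edges abstraction: all details about the real organisms are
# dropped; only the objects (nodes) and their relationships (edges) remain.
# The species and links are a hypothetical example.
food_web = {
    "grass": ["rabbit", "deer"],
    "rabbit": ["fox", "hawk"],
    "deer": ["wolf"],
    "fox": [],
    "hawk": [],
    "wolf": [],
}

nodes = list(food_web)
edges = [(prey, predator)
         for prey, predators in food_web.items()
         for predator in predators]

print(len(nodes), "nodes,", len(edges), "edges")
for prey, predator in edges:
    print(f"{prey} -> {predator}")
```

Nothing in this structure is specific to biology. The same dictionary could just as well list web pages and the links between them, which is what makes the abstraction useful for reasoning by analogy.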

Reasoning by Analogy: Making connections between a problem in one field and a problem in another field (which may already have been solved in the second field). Reasoning by analogy allows us to 'borrow strength' from another field, and it requires abstraction to identify similarities across fields that may appear to differ in their lower-level details (e.g. Internet Security vs Biological Epidemiology; "Keystone" Websites are like "Keystone Species" in Ecology). Closely related is the notion of metaphor, in which two disparate things are united.

A Problem Solving Example

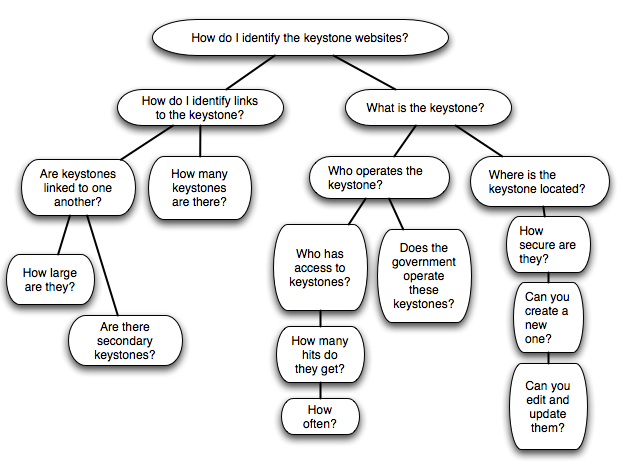

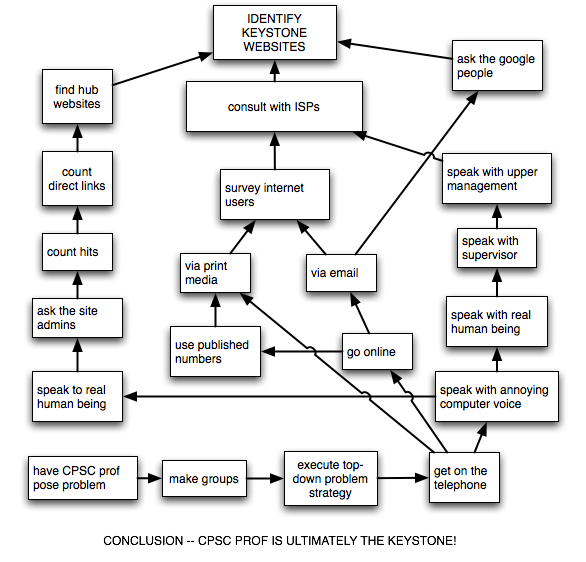

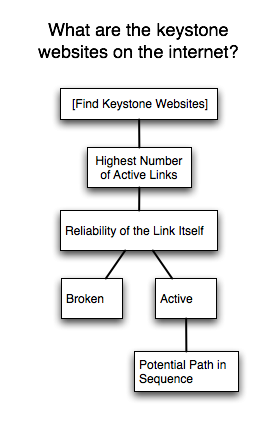

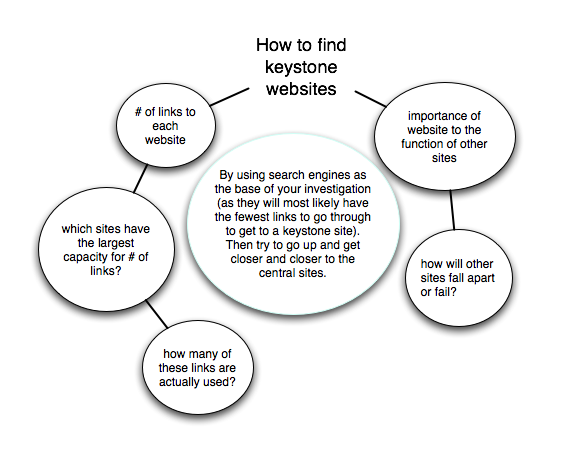

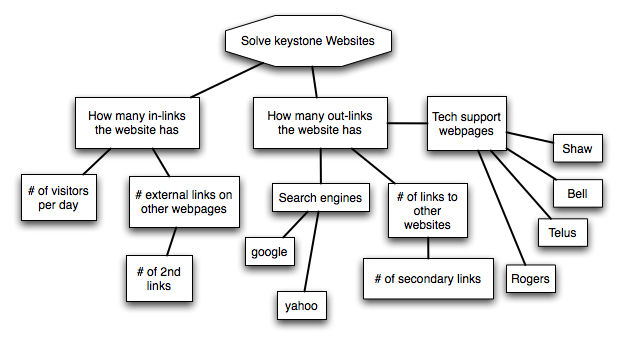

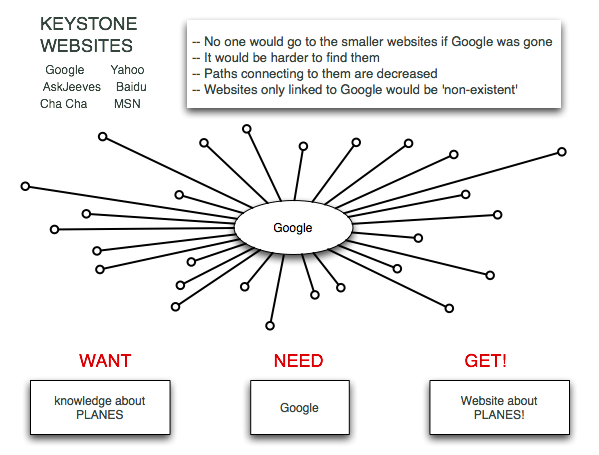

Let us use reasoning by analogy and abstraction, in the context of Top Down Design, to ask a particular question: What are the keystone websites of the Internet?

In biology, "keystone species" are the species that hold an ecosystem together: if something happens to those specific species, the ecosystem cannot recover. By analogy, keystone websites would be the one or more websites that hold the World Wide Web together.
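One way to make "holds the Web together" precise is sketched below. This is our own assumption, not a definition from the lecture: a site counts as more of a keystone the more disconnected pieces the network falls into when that site is removed. The sketch treats links as undirected and uses a made-up six-site graph. It counts pieces with a breadth-first search.

```python
from collections import deque

# Hypothetical undirected "web": an entry means two sites are linked.
web = {
    "hub": ["a", "b", "c", "d"],
    "a": ["hub", "b"],
    "b": ["hub", "a"],
    "c": ["hub"],
    "d": ["hub", "e"],
    "e": ["d"],
}

def count_components(graph, removed=None):
    """Count connected groups of sites, pretending `removed` is gone."""
    seen = set()
    components = 0
    for start in graph:
        if start == removed or start in seen:
            continue
        components += 1                 # a new, unreached group
        queue = deque([start])
        seen.add(start)
        while queue:                    # breadth-first search of the group
            node = queue.popleft()
            for neighbour in graph[node]:
                if neighbour != removed and neighbour not in seen:
                    seen.add(neighbour)
                    queue.append(neighbour)
    return components

def keystone_scores(graph):
    """For each site: how many pieces the web breaks into without it."""
    return {site: count_components(graph, removed=site) for site in graph}

scores = keystone_scores(web)
print(scores)
print("keystone:", max(scores, key=scores.get))
```

Removing "hub" splits this toy web into three pieces, and removing "d" cuts "e" off. Every other site can vanish without disconnecting anything. Real keystone analysis would need a more careful measure, but the intuition transfers.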

Keystone Species Example: We will illustrate keystone species with a simple graphic at http://www.vtaide.com/png/foodchains.htm, and then transfer our intuition over to the Internet (i.e. reason by analogy), using some examples from previous semesters.

The above example was made by Lianne Sherwin, Melanie McCann, Ryley Killam, Mina Miyashita, Amanda Jones and Colton Kent.

The above example was made by Team Turing.

How Search Engines Work

- Top Down Design Example:

  - Get Information ---> Spiders/Web Crawlers

  - Organize Information ---> Index

  - User Queries Information ---> User Interface and Query Engine

- The Spider or Web Crawler -- a program that "crawls" across web pages and retrieves tag headings and text content, which form the basis of a database.

  - Searches pages

  - Follows links

- The Index -- a form of "inverted file" that takes the database and re-represents it as an index of keywords linked to web pages (similar to the index at the back of a textbook).

  - Transforms the results of the spiders' forays into a single index

- The Query Interface -- what you, the user, see when you make a query, as well as what goes on "under the hood" to process the query.

  - How to make search easy for humans

  - Link between the questions you can ask and the index
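The top-down decomposition above maps naturally onto three functions, one per sub-problem, plus a top-level function that just chains them. The sketch is a minimal Python sketch under a big simplifying assumption: the "Web" is a small hypothetical in-memory dictionary, so no real pages are fetched. All page names, texts, and function names are made up for illustration.

```python
from collections import deque

# Hypothetical in-memory "web": page -> (text content, outgoing links).
# A real spider would fetch pages over the network instead.
WEB = {
    "home": ("welcome to the logic course", ["truth", "circuits"]),
    "truth": ("truth tables for boolean logic", ["home"]),
    "circuits": ("logic gates and circuits", ["truth", "turing"]),
    "turing": ("the turing machine", []),
}

def crawl(start):
    """Spider: visit pages breadth-first, recording text and following links."""
    database, queue, seen = {}, deque([start]), {start}
    while queue:
        page = queue.popleft()
        text, links = WEB[page]
        database[page] = text             # searches the page
        for link in links:                # follows its links
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return database

def build_index(database):
    """Index: invert page -> words into word -> set of pages."""
    index = {}
    for page, text in database.items():
        for word in text.split():
            index.setdefault(word, set()).add(page)
    return index

def query(index, question):
    """Query engine: pages containing every word of the question."""
    words = question.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

def search_engine(start, question):
    # Top-down design: the big problem is just the three sub-problems.
    return query(build_index(crawl(start)), question)

print(sorted(search_engine("home", "logic")))
print(search_engine("home", "turing machine"))
```

Each function can be developed and tested on its own, which is the practical payoff of top-down design. Note that the results are an unordered set. Deciding which matching page to show first is the job of a ranking algorithm such as Page Rank.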

Page Rank

Once we have an index, Page Rank can be thought of as the algorithm that determines how we sort the items in the index, i.e. what to return at the top of a list of search results.

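As a formula: $PR(u) = \sum_{v \in B_u} \frac{PR(v)}{L(v)}$, where $B_u$ is the set of pages that link to $u$ and $L(v)$ is the number of links out of page $v$.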

In English: to find the Page Rank of a page u, take each page v that links to u, divide v's Page Rank by the number of links out of v, and add up the results.

Technical Difficulty: This algorithm is iterative. To find the Page Rank of u, you need the Page Ranks of the pages v that link into it, which in turn depend on the pages that link into them, and so on. One begins by assigning each page an initial page rank of 1/#pages; so if your "universe" contained 10 web pages, each page would initially be assigned a rank of 1/10. Then, using the formula above, you calculate a first-iteration page rank for each page. You repeat this to get a second-iteration page rank, and continue until the page ranks (hopefully) stabilize. While this kind of calculation can be done by hand for a very small system, it rapidly becomes extremely computationally intensive.
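Here is one iteration worked by hand on a hypothetical three-page web (our own illustration, not from the lecture). Page A links to B and C, B links to C, and C links to A, so L(A) = 2, L(B) = 1, L(C) = 1. With 3 pages, each starts at 1/3:

```latex
\begin{aligned}
PR_0(A) = PR_0(B) = PR_0(C) &= \tfrac{1}{3} \\
PR_1(A) &= \frac{PR_0(C)}{L(C)} = \frac{1/3}{1} = \tfrac{1}{3} \\
PR_1(B) &= \frac{PR_0(A)}{L(A)} = \frac{1/3}{2} = \tfrac{1}{6} \\
PR_1(C) &= \frac{PR_0(A)}{L(A)} + \frac{PR_0(B)}{L(B)} = \tfrac{1}{6} + \tfrac{1}{3} = \tfrac{1}{2}
\end{aligned}
```

The ranks still sum to 1. Repeating the step, the values oscillate but settle towards PR(A) = 0.4, PR(B) = 0.2, PR(C) = 0.4. Substituting these back into the formula confirms that they no longer change (0.4 = 0.4/1, 0.2 = 0.4/2, 0.4 = 0.4/2 + 0.2/1).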

For more technical details go to: http://en.wikipedia.org/wiki/PageRank

In a sense, returning to our keystone website example and again reasoning by analogy, we could say that the website(s) with the highest page rank are the keystone websites of the Internet.
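Below is a minimal Python sketch of the simplified, iterative Page Rank described in these notes, run on a made-up three-page web. It follows the formula above and therefore has no damping factor. The version on the Wikipedia page adds one (commonly 0.85) so that the iteration behaves well on the real Web. The sketch also assumes every page has at least one outgoing link. The function and parameter names are our own.

```python
def page_rank(links, tolerance=1e-10, max_iterations=1000):
    """Simplified Page Rank: PR(u) = sum of PR(v) / L(v) over pages v
    linking to u, iterated from a uniform start (no damping factor).

    `links` maps each page to the list of pages it links to; every page
    is assumed to have at least one outgoing link.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1 / n for page in pages}        # start at 1/#pages
    for _ in range(max_iterations):
        new_rank = {page: 0.0 for page in pages}
        for v, outgoing in links.items():
            share = rank[v] / len(outgoing)       # PR(v) / L(v)
            for u in outgoing:
                new_rank[u] += share              # summed into PR(u)
        converged = all(abs(new_rank[p] - rank[p]) < tolerance for p in pages)
        rank = new_rank
        if converged:                             # ranks have stabilized
            break
    return rank

# Hypothetical three-page web: A -> B, A -> C, B -> C, C -> A
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"]}
ranks = page_rank(web)

# Sort pages by rank, highest first, as a search engine would.
ranking = sorted(ranks, key=ranks.get, reverse=True)
for page in ranking:
    print(page, round(ranks[page], 3))
```

Under the keystone analogy, the top entries of `ranking` would be the candidate keystone sites. In this toy web, A and C tie at about 0.4 and B trails at about 0.2.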

Control Issues

The prevalence of search as a means of locating information, making purchasing decisions, and finding people, together with its incorporation into other technologies such as email, raises a host of social issues. Here we raise some questions in several areas where search technology raises thorny social issues, particularly about the degree to which control of a technology may lead to control or influence in a much broader context.

- Economics

  - Are the rankings objective?

  - How do paid ads show up?

  - What happens if a page's rank suddenly changes due to changes in Google's algorithm?

  - Click fraud

  - Link spamming

- Privacy

  - "I took it off the net, but now it's cached by Google."

  - Blogs and emails are often searchable

  - Moving search technology to the desktop: Google office tools, Google Mail, etc.

  - Mining search data (see Searching the Searches)

- Freedom

  - Political control of website search results

  - Searching the Searches

- Big Picture -- Back to Scale Free Networks

  - A new site is more likely to link to an existing site that already has many links ---> higher page rank for the existing site.

  - So... does the Page Rank algorithm promote the Internet as a scale-free network?

  - And do the "issues" described above affect the structure of a scale-free network?
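The first "Big Picture" bullet describes preferential attachment: a new site is more likely to link to a site that already has many links. Below is a minimal Python simulation of that idea, in the style of the Barabási-Albert growth model. It rests on simplifying assumptions of our own: each new site adds exactly one link, and the network size and seed are arbitrary. It typically shows a few sites accumulating many links while most have only one, the heavy-tailed pattern of a scale-free network.

```python
import random

def grow_network(num_sites, seed=None):
    """Grow a web one site at a time. Each new site links to one existing
    site chosen with probability proportional to the links it already
    has (preferential attachment). Returns site -> number of links."""
    rng = random.Random(seed)
    links = {0: 1, 1: 1}          # start with two sites linked to each other
    endpoints = [0, 1]            # each site appears once per link it has
    for new_site in range(2, num_sites):
        target = rng.choice(endpoints)    # well-linked sites are picked more
        links[new_site] = 1
        links[target] += 1
        endpoints.extend([new_site, target])
    return links

links = grow_network(10_000, seed=1)
counts = sorted(links.values(), reverse=True)
print("most-linked sites:", counts[:5])
print("sites with only one link:", sum(1 for c in counts if c == 1))
```

Choosing uniformly from `endpoints` is a simple trick: a site with k links appears k times in the list, so it is picked with probability proportional to k.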

I will leave you with this small list of issues and questions to ponder.

Summary

- We examined a few design issues in terms of Abstraction, Reasoning by Analogy, and a specific design technique, "Top Down Design".

- We used a specific question, "What are the keystone websites of the Internet?", to motivate our investigation.

- This led to a top down design description of search engines, and a simplified description of Page Rank.

- Finally, we ended with a small list of social issues that might be affected by technologies such as Page Rank.

Text Readings

Resources

- Python Programming: An Introduction to Computer Science. By John Zelle. 2004.

- Google's PageRank and Beyond: The Science of Search Engine Rankings. By Amy N. Langville and Carl D. Meyer. Princeton University Press, 2006.

- The Google Story. By David A. Vise. Delacorte Press, 2005.

- The Search: How Google and Its Rivals Rewrote the Rules of Business and Transformed Our Culture. By John Battelle. Portfolio, 2005.

- Information Politics on the Web. By Richard Rogers. MIT Press, 2004.

Homework

(none)