Courses/Computer Science/CPSC 457.F2014/Lecture Notes/Scribe2

September 12

From Programs to Processes, Part 1

Consider a binary file as an input into the OS Process: is a program in execution, or going under execution.

Cat- concatenates files Ctrl r: reverse search for history

Command man displays the manual pages for different functions

can use readelf to go through specific parts of the program -a: prints out contents of header ELF .text: is your program code .rodata: is read only data .ctors: constructors .dtors: destructors .data - global variables wind up in here

example: DWORD PTR (ebp-0x4), 0x0 --the load value 0 into location of pointer ebp address minus 4

strace among many things gives the system calls

pstree shows how processes were created

fork is a system call that clones the current process

Spetember 15

From Programs to Processes, Part 2

Class Starter Exercise at beginning of class A) Using hexdump and the ‘mathx’ program, find: 1. entry point address 2. start of section headers 3. verify the contents at effect

ELF is a self describing file format Primary OS responsibility: -provide an execution environment for ‘user level’ software applications (ie. Programs)

src math.c -> gcc -> mathx -> OS kernel (kernels job to make it into a running program)

ctrl c: kills the program that repetitively keeps running.

ctrl c gets delivered to kernel, the kernel has to decide where to send the command, it sends it to the process that is currently running.

strace can trace the process while in execution

when it stops it moves to background. The process still does exist and is running in the background.

Ps – lists the process executing

Pstree is useful to see who is parent and who is child.

If parent gets deleted child will still exist but will have a new parent.

In order for any command to work, the OS is involved. For one process to talk to another the OS must be involved.

If OS is not involved then it can break things very easily.

September 17

Guest lecture by Prof. Williamson on some OS history and the evolution of OS software.

The OS has many broad definitions:

>OS is a communicator between the user who is inputting commands and the hardware, which is functioning based on the input given from the user.

>OS is in complete control of the program being executed.

>resource allocator: decides between conflicting requests for efficient an fair resource use

>control program: controls execution of programs to prevent errors and improper use of the computer

Key Concepts of OS kernal that apply to most OS:

-resource allocation

-accounting

-protection/security

User Support: -user interface -program execution -(I/O) -error detection and handling

**Process : a program in execution

**Address Space : portion of the memory assigned/allocated to a process(physical or virtual memory)

**Files : an abstraction of the storage data; sequence of bytes

**I/O : input to the computer or output from the computer(wide variety of devices ex. keyboard, mouse,disk, monitor)

**Protection : Hardware mechanisms to support access controls

Requirements for a good program:

Make programs efficient for the user and the the OS as well. So the user will be able to interact with the OS without having to provide too much detail to understand it. Also, the OS will be able to get the job done without taking any extra steps into account. This makes the program efficient, simple and understandable.

September 19

What is an Operating System?

OS came as a software and a design philosophy. OS major responsibility is to run a piece of code.

Have a think-pair-hear exercise; discuss these terms Kernel vs. shell vs. distro.

Interested in ELF and how to craft them, read “A Whirlwind Tour on Creating Really Teensy ELF Executables for Linux”

Side note: Upcoming sessions we will talk about SYSTEM CALLS

OS does not execute code, what executes code? The CPU itself does.

Job of OS is to get out of the way, you want it to play minimal part in executing the code.

If you are writing and operating must know how to do what you have to do and get out at the end

OS vs. Shell

-Alternate to GUI

-OS is the ‘entire’ system, and shell is part of it.

-Shell is a user level program

OS vs. OS distribution

-a version brand (distribution)

-Many distributions tweek the kernel, add useful libraries.

-OS is not useful without a distribution

OS vs. OS Kernel

-Kernel is the “brain” and OS is the “body”

-Kernel is a buffer zone

-Kernel: privileged code

OS is a pile of:

source code,

a program running,

a resource manager,

an execution target.

Privileged? Supervisor?,

userland/kernel split

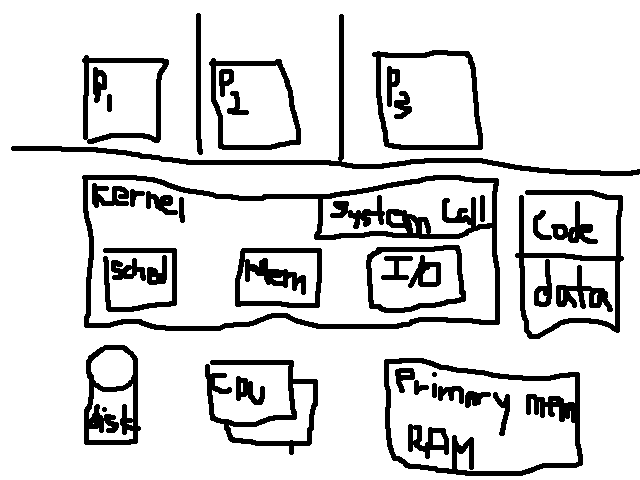

Drawing an operating system

The line in between represents a form of abstraction, it abstracts away the details of the kernel and the hardware from the user. Why? So we don’t have to worry about things such as if we are executing on an intel etc. That stuff is hardware that we don’t need to stress about every time we create a code.

Part of Kernel is the System Call interface. Pieces of kernel and hardware are inter related. Monday we will discuss the architecture of the system.

September 22

All input and output has to be assisted by the OS.

P1, p2, p3, are just a combination of code and data

Students should understand that when the CPU is in protected mode, the privilege control infrastructure and instructions are available to a piece of system software running in ring 0. Students should also understand the role that interrupts and exceptions play in managing context switching between userland and kernel space, and they should understand the role that the GDT and segment selectors play in supporting isolation between the kernel and the user space.

You need hardware support to switch from one operation to another.

If p1 is executing, how do you kick p1 off to run another function? You cant, the OS has interrupts and exception to jump from one process to another. Kernel is essentially the ELF.

CPU Mode

When you power on your piece of hardware, it first starts in its real address mode which is unprotected. Now we can move into protected mode, which allows it to force a separation between process and kernel.

System Management Mode, is almost completely separate it is a hardware management mode.

OS started out by replacing the human operator, this is one of the reasons we emphasize that OS is something that loads and executes.

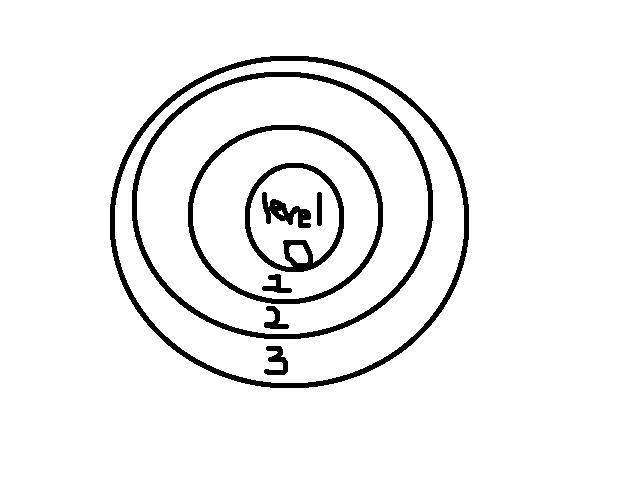

What separates user and kernel mode? CPL bits in CPU, DPL bits in segment descriptors (two bits)

Supervisor environment

CPU maintains a registry to help the supervisor mode keep track of process. GDT (global descriptor table) is part of memory to hold a physical memory address.

A segment descriptor, there are three different types. The job is to hold a metadata.

Kernel data vs. user code. What prevents user code to not write in kernel data, it is bits 13-14 DPL (descriptor privilege mode) it prevents user code to over write the kernel data.

Privilege Rings, ring 0 is the most privileged data, and level three is user level code.

Physical memory is a piece of big array. 4.3 billion memory location to place stuff into. Highest 1gb is reserved for kernel code data.

Address beginning with C or higher they are pieces of kernel code or data.

Process started with bfff… are part of stack data in the bottom addresses.

IVT register points to the GDT register. IDT (interrupt descriptors) OS dispatches events, the earliest thing the kernel does is set up the IDT. Kernel needs to jump to jump to somewhere, IDT gives a routine, IDT is a function pointer into the kernel code. IDT takes a particular vector.

September 24

System Calls

When goes into protective mode have access to rings then.

A call to a method is handled by the compiler.

Protection of the OS is enforced by CPU.

There are about 300 system calls on linux, but only a relatively small set is used frequently.

Eax holds the syscall number, which we can get from unistd.h

Ebx, ecx, edx, esi, edi hold the system call arguments

Issue an INT 0.80 instruction; this causes the CPU to generate an interrupt and trap to the OS (via the IDT). The CPU then transitions to supervisor mode, one mechanism that enforces the kernel

Cannot directly jump from user phase to kernel phase.

Segmentation fault – an illegal memory reference, cannot jump from user to kernel.

strace is useful for tracing system calls.

Gdb –q ./filename.x ← runs gdb on filename.x

Break _start ← pauses at this location in the program

SYSENTER instruction to jump into the kernel

September 26

1)Write a user level tool (itrace) uses ptrace system call. Ptrace is a system call but it allows us to break through the isolation between kernel and user space, ptrace breaks through that barrier. use x86 library to convert to machine code.

2)Remains at user level. Insert some non original code (given) into any arbitrary ELF (you are writing kind of a virus). The thing you insert must execute two system calls. These system calls can be of any kind.

3) Do not have to modify existing kernel code. Write an LKM. Has to do with process descriptor (will be discussed next week). LKM should behave like a fake device. Can find how to do a lot of this stuff online.

Object file is .o file, it is nothing more then an ELF.

Machine code, invokes system calls related to the shell. Knowing how to speak with machine you will understand how the CPU works and how it cooperates with the OS.

A CPU executing the code:

Fetch – decode – execute (code), from user addresses, does not write like a kernel address into the machine, because we are using the IDT mechanism. The fundamental reason is that we cant jump to that address. Main reason is for protection.

Fetches next couple of bytes, the int causes it to transfer the command to jump from user to kernel. Now no longer in user space. The kernel does what you ask it to do, the right system call will do what you ask it to do. Int causes an interrupt and then jumps. The cpu just has to change from user to kernel.

So fetch – decode – execute is now in kernel.

The interrupt handler is just dispatching, the kernel is responsible to transition back to user.

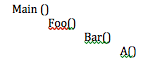

Have a process address base to keep track of this. On the stack there are a bunch of activation records. They all have return addresses, shows where to return after done. If you are building one, it is a contract between two functions, a caller and a callee.

At the end of class we went over a C code. Make sure you understand MACROS

September 29

Different ways to design OS.

Linux takes the approach of having a monolithic approach. In this approach the all the code is just one big code instead of having different branches. Monolithic kernel is very fast. Problem is a bug in the kernel will take down the whole kernel.

Microkernel is placed in many separate processes. This approach is much safer but difficult to bug due to many branches.

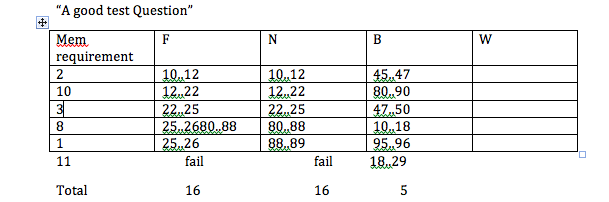

Think of a process as a virtual CPU. All processes cant use CPU as once, the OS needs to keep track of what is currently being executed. A process is a virtual CPU.

Thread vs a process, in linux there is no difference. Designer of linux did not distinguish between both. Linux uses the term lightweight process to represent a thread is a process and a process is a thread.

A process is in one sense is just a program counter, and in some sense a process is a virtual CPU.

OS keeps track of all the metadata.

When you need to keep track of lists of things, the kernel has systems embedded in it to carry the instruction. If you are waiting for something from I/O the CPU puts that procedure to sleep because it is not of use at that moment. If still waiting, it can also be sent back into a queue to be executed after other functions have executed.

October 1

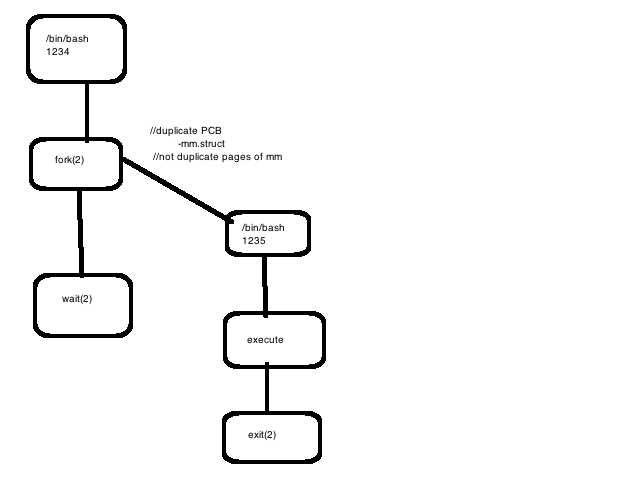

Processes come from other processes, via fork.

Process satisfy all user requests, so quick creation is important! But the fork/exec pattern implies:

3 mechanisms exist to make process creation cheap:

(1) Copy on Write

(2) lightweight processes

(3) vfork: child processes that uses parent PAS, parent blocked

In strace you can narrow down to what system calls you are interested in.

(-f ) fillow.

Clone is a general service, provides you with a thread to share on parent database.

Vfork assumes child will do something executable. (very rarely used). If vfork used wrongly it will crash the whole program.

October 3

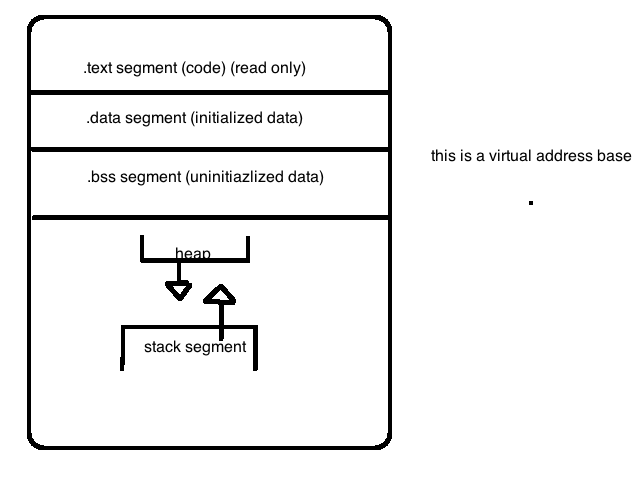

Process address space is an abstract process. Organizing and managing it is a major job of the operating system because it is extremely completed. Is a linear address base from 0 to n.

Stack keeps track of the program and the functions. Activation records get piled onto the stack when they get called.

What is the purpose of having a virtual space: if you have the ability to write into any possible address base is not a good thing, so we have virtual instead, it provides protection to give everyone virtual space instead of the physical address. It isn’t secure to provide physical memory address due to protection.

Partial sections of memory, is not a good idea either because programs require a lot of memory which ends up ruining the program due to limited memory.

Memory region: sub section, range or a piece of memory.

/proc/self/maps has six columns:

Start and end address of the memory address (32 bit address)

Second set of column read and write permissions

Third column: offset

Fourth: device where the memory region came from

Fifth: size

Sixth: library set

Fork(): create a PAS

Execve(): replace PAS contents

_exit(): destroy PAS

brk(): modify heap region size

mmap(): grow the PAS; add a new region

munmap(): shrink PAS; remove region

mprotect(): set permission

Map will grow an existing memory region or create a new one.

When process are created most likely the heap is of the same size.

October 6

translation from a logical address to a physical address. Inbetween the logical address and physical address is converted to linear or virtual address before moving to physical address.

job of mmu is to translate logial address and point it to the virtual space and translate into hardware withoin the phyiscal address.

logical address has two componemts; segment selector and the offset. logical address has an offset fixed by a ds, which is a segment register. x86 has segment registers. in ds is a segment selector, which helps to select a particular part of memory. segment selector is nothing more then an offset to the gdt.

global descriptor table has a segment descriptor.

memory address has a base address (where the segment starts), and limit (where it starts and ends), also the privildge mode is present in the base address.

second part of the logical segment has the offset which sets off to the linear adress space.

linux only has four segments.

logical address is equivalent to the linear or virtual address.

segment registers

ss for staff

cs for code

ds for data

es general purpose

fs general purpose

gs general purpose

process cant share the same place. we need different addresses. OS comes to rescue to change linear address to some different physical address.

linear adress is taken by paging and split up into three address. (dir, table, offset). in the test struct there is a data struct there is a pgd(page global directry) which gets a new physical address.

32 bit virtual address (4096)

10 10 12

Dir | page offset

Offset

0x08049858

08 04 98 58

[00001000] [00000100] [10011000] [01011000]

10[0000100000](32) 10[0001001001](73) [100001011000](2^11+2^6+2^4+8)

directory page table offset

direnctly will point us to a particular page table, page table points to a physical address which will be 73 page will give us the byte we are talking about which is the actual offset.

October 8

Kernel doesn’t keep memory processes at once. There is only a limited amount of RAM. On average NNN processes. Processes don’t know how much memory will actually need. Each believes the can at least access 4GB.

When processes allocate a lot of memory, the OS will have to find virtual addresses.

October 10

Upcoming midterm:

-Will include code

-system calls and reasons behind its behaviour

-characteristics of OS and processes

how do we map the PAS of each process into the available physical memory?

First solution: map a virtual address to physical address.

PID Virtual Address Physical Address

123 0x804abcd …

123 0xabcd …

123 0x9000001 …

123 ….. …

memory = size of entry x # of entries x # of processes

M = 2^35 x N – where N is the # of processes

Page directory - reduces the memory requirement for storing a mapping.

- Page size is fixed to compile size.

Bits: Contents of Page table Entries

20 bits: page from index

Present bit: is this logical page in a frame?

Protection bits: R/W/X (R sometimes implies X)

Dirty bit: was this page written?

Accessed bit: hardware sets this; OS must unset. Used to determine candidates for swapping

User/Supervisor: ring 3 or everything else

Virtual Memory mechanism

Path 1, process issues M; M in physical

-linear address belongs to a page that is actually in physical memory.

-Page translation takes care of identifying the appropriate physical frame. The OS is minimally involved.

Path 2, process issue M; M absent

-Linear address is not in physical memory. Page translation…

Case A: Free frame

-There is a free page frame.

-The os selects it, fetches the data from disk and writes the page into the frame, updating the indexing as needed

Case B: N free frame

-Select some frame

-Evict its content

-Write new page contents to frame

EAT = (1-p) * accesstime + p * pf overhead

P is the probability of a page fault occurring

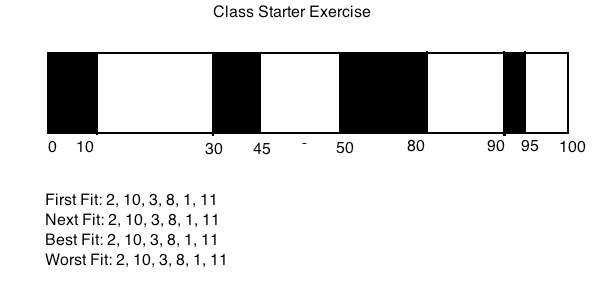

October 20

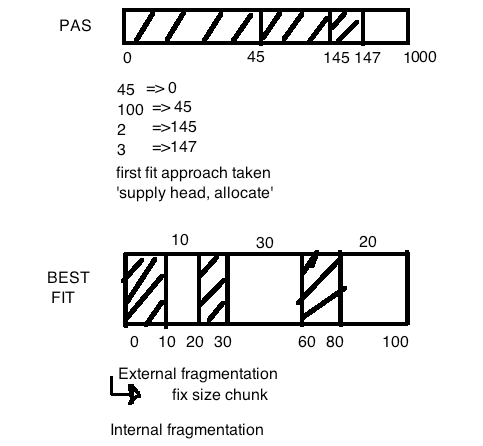

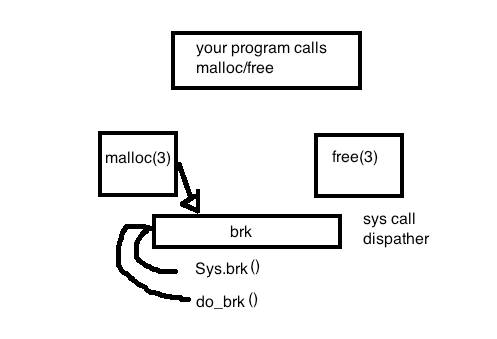

Don’t care what malloc does

Best fit doesn’t solve the problem of fragmentation

It’s okay to stead space, then you have data and meta data mixed together in one address

It’s useful to have a backwards pointer towards that address.

Hardware and OS worries about the details like DPL bits.

Learn more by reading GLibC or malloc source code, or even better, reading the GNU C Library Implementation on www.phrack.com/issues/57/9.html#article. Try it out in a program by calling malloc a few times and checking memory before and after the address.

Class question: How does this solve our fragmentation? Fixed-size pages/page chuncks solves external fragmentation. Keeping a list/lists of free blocks helps to minimize internal fragmentation, but it doesn’t really get solved completely.

Class question: How big is a chunck? Depends what you mean by chunck. 4 bytes for each field, so at least 12.

Class question: Does a break...? Break is for giving you the heap. Returns a linearly contiguous something

Class question: What happened when you ask for more memory than a page size? It has to go ask the OS. The data structure doesn’t really care about page boundaries. Refer to pictures in prof’s lecture notes.

October 22

Malloc manages its own memory

Difference between internal and external fragmentation?

External Fragmentation:

Frangmentation of physical memory is subjected to the evolving and arbitrary-sized memory requirements of multiple programs. Total free memory may be enough to satisfy the demands of new or existing programs requests for memory allocation, but is unusable because ot is non-cont..

What construct solves the issue of external fragmentation?

-paging

pages- ensure that memory allocation to processes address space occurs in predictable continues, fixed size chunks.

Internal Fragmentation - arises because of 'wasted' space inside a page; e.g. a 64 byte strutter alone on a 4kb page.

Malloc- allocates memory

Calloc- clear or sets flags

Realloc- resizing from already existing momory.

Free- pass in a value and give a previously allocated memory back

Class question: Free vs calloc: calloc is basically malloc with extra function, free allocates existing memory.

Both (mmap and brk) allow to ask for more mem from OS:

Mmap- for allocating and deallocating memory regions

Brk- increasing or decreasing a heap

Prev-size <- went over it

Size: contains the length of the current block of memory.

(01) 0 not mmapd 1 in use

size is encoded in line.

The whole internal info is held in-band – a clear channeling problem.

October 24

October 27

System Startup overview

Startup is a contract between few pieces of code. BIOS was helpful before in the age, but today it is totally useless, its job is to transfer control into next piece of code. BIOS job is to jump to bootloader. Bootloader job is to provide options to jump to. Then the bootloader exectutes the program, transfers control, finally the kernel gets the chance to learn. Now the kernel is running. Job of kernel is to forget what BIOS did because they are outdated. The OS goal is to create a working environment. OS has to establish its own code is loaded into system properly. Make sure the code is running, the code will set up and initialize. Once that is done, the core is set up, now we it can focus on creating user level processes. User level processes get created via fork.

Some of the data values are written and filled up by bootlader

Calll main //suffic notation for long call-l-

The main you can see the early stages are tightly tied to the architecture.

Go_to_protected_mode(); //last line in main

Setup_idt();

Setupgdt();

//still not in protected mode till:

protected_mode_jump();

in protected_mode_jump

//to be in protected mode is to be able to write a bit into a control register

orb $x86_ CR0_ PE, %d1

code32_start; //default position

jmp intial_code;

goes to:

.long i386_start_kernel; /this is the kernel routine

//creating independent kernel cpu in function kernel_thread(), which just calls do fork essentially.

Finally we create a new process at the end of kernel_thread.

Run_init_processs(hard codes into try executing x)

If doesn’t work then goes to function panic();

Which goes to kernel_exec(); //which loads an elf and executes it.

Etc/rc.d/init.d/ starts forking off all the processes

October 29

“Problem of scheduling”

How does OS choose which process should be given to CPU?

Scheduling is a mechanism that creates an illusion that many processes are executing at once. CPU core can ONLY do one process at a time.

Into designing a scheduling algorithm: priority, fairness, efficiency, progress, quantum, and preemption.

quantum- has direct impact on how you calculate priority and fairness

preemption - allows the OS to get involved in the scheduling

Contrast: preemption vs. cooperative multitasking(processes ceases the CPU).

processes are oblivious.

Quantum- the default amount of time given to processes to accomplish progress. Getting it right is tough, what if time is too short? if too long, concurrency disappears.

Types of Processes

Traditional classification: CPU bound and I/O bound

Alternative Classification: Interactive, Batch, and Real Time

A batch process can be I/O bound or CPU bound

Approaches to Scheduling:

we want fast response time

dont want processes not doing anything in the background

dont want unfairness over different processes

“nice” command line program adjusts process priority.

scheduling algorithm:

first come first served- jobs arrive in certain order, execute in that order. If all arrive at same time, take the lowest size process etc.

shortest job next- con: processes that are of importance and are large starve in the background.

round robin

October 31

sched.c

O(n)- it is linear

It will scan 10000 process just to see what’s next. It is relatively simple but faces scalability problems.

If you have to find the priority task, don’t you have to scan through 10000? Have a priority queue, not relevant still O(n).

What enables perception? – a reliable hardware-driven clock/timer interrupt.

Put_prev_task(rq, prev); //kicks off previous task

Next = pick_next_task(rq); //get new task, O(1) operation.

Give a consistent time slot to each process, once done place at the bottom of list, priority gets higher position etc. high priority gets CPU more often but still for the same amount of time as others.

Low priority wont get CPU as much as they should or need to.

Use trees to determine who goes next (the left most node on the tree)

Kernel maintains a very small processes kernel stack. To keep track of its own internal control flow. Holds other metadata processes.

November 3

Emerging Problems successful multi processing

-Many virtual CPUs- no inherent atomicity

-arbitrary selection of next process- unpredictable ordering of tasks

^^ creates an illusion that is potentially incorrect, because any task can be interrupted in the middle of an execution. We cannot tell which one will go first.

-We don’t have the luxury of having multiple CPU for each process, therefore we require communication(share memory) between processes who share CPU.

-Concurrency is having a CPU having multiple processes.

-many different tasks, may share a piece of code(critical section-as a code region that modifies share state).

Multiple tasks or threads entering the same critical region without some control or coordination mechanism will interleave with each other in ways that produce race conditions and incorrect output.

OS is constantly being interrupted with different calls.

In multi process we can have different threads accessing the same info.

How do we prevent concurrent access? Mutual exclusion: is a concept that states only a single task should have access to a critical region at a time; enforcing mutual exclusion helps serialize.

How do we achieve mutual exclusion? Don’t let a critical section into a thread at one time.

Critical sections- not just particular lines of codes. They come into existing when you create a second thread that accesses that section. So two threads may access the critical section concurrently.

Classic IPC problems

Producer(writing data)-Consumer

-Producing data, then buffers it then the consumer is receiving data that’s being buffered. When producer is trying to create more values, but the buffer is full? Stop producing temporarily, and wait for consumer so there is space in the buffer. What if buffer becomes empty? Wait till it fills up. How do they detect that? Classic IPC problem.

-Processes have to coordinate who uses the resources

Clone is a way to create a child thread.

X-> filed1 = 100 is not atomic: it cannot be accomplished in the scope of one machine instruction. The potential exists for the CPU do start executing this line of code, be interrupted, suspended the current process and execute another sequence of instructions that reads or writes the shared state.

Potential Solutions:

Spinlocks/busy waiting- feasible but usually wasteful because CPU is busy doing nothing. While lock- until it unsets the variable to go into critical section. Access has to be atomic. Spin locks don’t make much sense in uni process systems.

Lock variables-recursive problem.

Disabling interrupts-not trustworthy, multi-CPU system unaffected.

How long is the critical section? Can be fairly long, do you want it disabled for that long? No, concurrency loses its illusion that it creates. Don’t disable interrupts, you will lose activity.

The heart of the solution is to say that we really need an atomic instruction that the CPU understands natively. Set and test at the same time in the same clock cycle.

CMPXCHG(used for testing and modifying semaphores), need this support in CPU there is no way you will get safe concurrent execution.

TASK_LOCK doesn’t do anything in uni processor, but it does on multi processer.

Lock(m) -> mutex_lock in assembly code. Mutex is a piece of state, could be a memory location.

Unlock(m) -> mutex_unlock in assembly code.

November 5

Main ideas of this lecture:

There are 4 conditions needed to make deadlock possible (Mutual exclusion, hold & wait, no preemption, and circular wait)

Which of these do we relax and how?

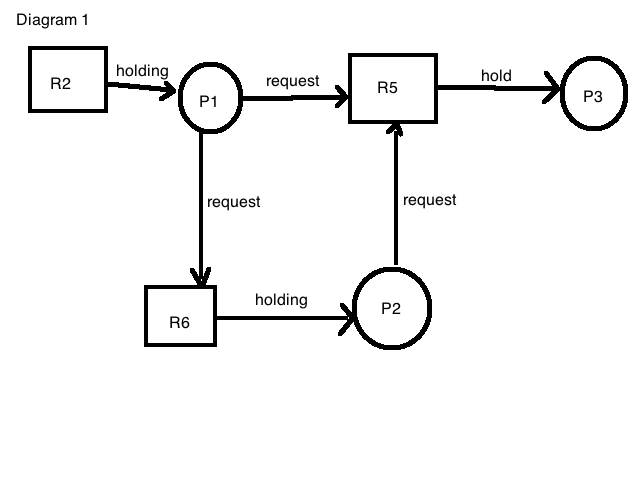

How do we see if there’s potential deadlock in a current system state? Model the situation. (diagram1)

Ie. “Process 3 is holding Resource 5”

Is deadlock possible in this situation? In this example, there is no deadlock. But if, for example, P1 was holding R5, there would be a cycle and therefore a deadlock. Note that a circle does not constitute a cycle.

Possible Exam Question: draw such a graph and answer whether deadlock is possible.

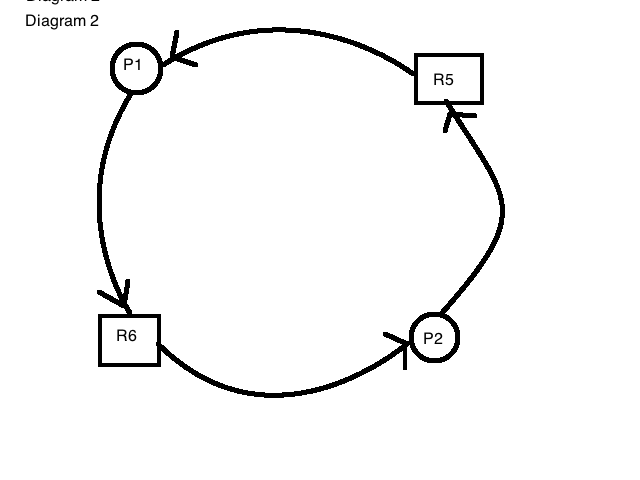

This situation represents a deadlock. (diagram2)

Now, how do you find a cycle in a linked list?

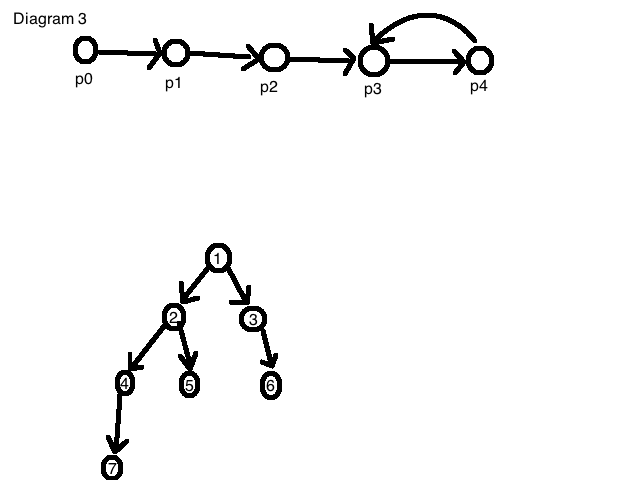

1) Go through each node and see if any other node points back to it. (diagram 3, top)

2) Depth first search example (diagram 3, bottom). Work down from list. Are there any unexplored edges? Add to list, continue. If the list already contains this element, this implies deadlock.

There are different approaches to handling deadlocks. The main approach taken in linux kernel is preventing it from happening in the first place by breaking one of the conditions.

Mutual exclusion and hold & wait and no preemption are all hard to break. So breaking the circular wait condition is the way to go, but relies on developers to do proper locking.

Coding example: We looked at tie.c, which shows how two threads attempting to modify pieces of shared state cause a deadlock. The critical pieces of shared state are box1 and box2. We have 3 threads here, the main thread and the two children. Both children acquire the 2 locks, but in reverse order. An illusion of concurrency is created, so now all 4 conditions are met. What’s the fix for this deadlock? Make consistent the order of the acquisition of the locks. This eliminates the circular waiting condition and prevents deadlock.

November 7

Kernel can preempt itself. It can interrupt a kernel thread and go off another task.

Kernel preemption I a concept in which the kernel can preempt other running kernel control path. For example a network card receives data, tells CPU its receiving data. It has to ask the kernel, CPU has to interrupt the kernel and stop what its doing to execute the routine from an SD card. It is a feature that you want.

Synchronization Primitives (good test question)

Atomic operations- certain types of machine level data that you can access in zero clock cycle. You are guaranteed safe access.

Disable interrupts

Lock memory bus (x86 lock prefix)-is a pseudo code, can lock the memory bus.

Spin locks- one thread allowed in critical section

Semaphores- not like spinlocks in the sense that the invoking process is put to sleep rather then busy waits. Kernel semaphores should only be used by functions that can safely sleep

Sequence locks

Read-copy-update

Simple asm instructions that involve 0 or 1 aligned memory access

Read-modify-update in 1 clock cycle (inc, dec)

Anything prefixed by the IA-32 ‘lock’ prefix

Count number of threads in the critical section.

November 10

READING BREAK

November 12

CLASS EXERCISE

November 14

When using semaphore do not have to worry about calling sleep()

Rest of semester we will look at few different ways processes can communicate with each other.

Signals offer a way to send short, pre-defined messages to processes. Signals are well-understood, easy to use and easy to program.

Signals can be generated by:

-the user

-another process

-the kernel

-kernel on behalf of the hardware

When you receive a signal, usually means something has gone wrong.

What should a program do after it encounters any error? It’s a specialized thing, and depends on each program and how you reference in it.

Signal s is generated.

Kernel attempts signal delivery to current process.

Else, Kernel delivers signal to process

But process may have blocked this signal

Process may also be masking this signal

November 19

Overview of File Systems

Two Abstracts

1. Perfect piece of hardware, unlimited ideal storage. Random access takes perfect amount of time.

2. Idealized notion of a file. Maybe its some collection of bytes (its some data).

Modern file systems work really hard to make you believe that you have unlimited memory.

A minimally viable file system, which we will have to minimally implement to run.

Need an ability to seek into some bytes. Random access is good, tell system what byte you are interested in.

File really is a virtual address base.

Seek() also implies, if you implement it naively (one argument) move file pointer there and retrieve the byte, will take a very long time.

Write() implies your data will land in file.

Managing data is very important, you don’t just design a system, you need to know the requirements for it.

If you are using a user level operating systems, you have to figure out what kind of file systems will be on the system. So if there are 500,000 files for example, some files are very large, and few that are small. This tells us the size and nature of the files we are dealing with. Where are these files?

Seek() on wiki. Opens a file, writes some data to that file, calls the seek system call, to go deep into the address base, one giga byte into the address base, and writes to it. You can mount a file system to a (sub)directory of another file system.

You can mount multiple file systems to a single mount point, effectively stacking multiple file systems on to one point. The previous file system is hidden (partly). Details of how this happens are beyond our current discussion.

Once you allocate the byte, the file system has to go retrieve the data, we like to treat files in fixed size blocks.

Linked list allocation: Pros: conceptually simple, a file is composed of blocks that point to each other. Con: random access is slow.

Flat inode: the collection of inodes at the beginning of the disk each…

November 21

Approach journaling: log the metadata to a fixed location, some point later on a kernel thread will come along and write to an actual location.

Purpose of journaling, is an extra bonus, keeps the data protected. File system does not corrupt.

Ext2 (compare with FAT: followed on by Ext3 which adds journaling)

-The native linux file system, linux can support any sort of file system. EXT2 is designed based out of the simple unix UFS.

-Goal: partition up some idealized storage medium to store an arbitrary number of files.

Locating blocks to disk, is the work of file systems. Consider file system as a format.

boot- machines boots up

swap- disk persistent storage.

Number of block groups- a continuous number of bytes. Block size – 4 kb

We have to store inodes, is the metadata that is associated with the file. The size depends on the definition of the inode size.

-maintains a couple of bit maps. Helps describe the free inodes and bits.

Super block – is basically the primary piece of metadata for the file system itself. Keeps track of number of inodes allocated.. helps the file system do its job.

XFS: clean-state fs design from silicon graphics

November 26

Key idea:

Map a name in a directory tree -> blocks of file content on some device.

But many devices, many approaches to mapping/indexing, and a lot of legacy data..

So: the OS kernel has to be flexible

Every process has ability to reference open files.

The open system call: pass in a string and returns the file descriptor (which is just a small integer).

When you want to do read/write system call? /home/Michael/a.txt <- turns that into a valid inode. References it to some small number like ‘4’ for example. So read this buffer in bytes.

December 1 - December 5

THIS LECTURE WILL NOT BE ON FINAL (About networking)

System calls to read/write important network state and content.

Networking isnt a specialized topic. It is a good illustration of the things we have spoken about uptill now.

Network allows the communication.

The OS responsibility of multiplexing various things is on display.

In the network realm, there is a zoo of different network devices.

OS is responsibility is as a resource manager.

The network often provides user level as an idea of a socket(weird virtual content, which doesn’t really exist, socket is the name is the bytes).

Forwarding(provides a gateway or router).

EXAM INFO

Types of Problems:

1. Process scheduling algorithms

2. Disk scheduling

3. Memory allocation

4. Concurrency, synchronization primitives, deadlock

5. page replacement

6. Bankers Algorithm

7. Process lifecycle

8. System calls vs. function calls

9. File mapping, block allocation

Concepts tested:

1. Polling vs. interrupts

2. Resource allocation, space and time multiplexing

3. Process lifecycle

4. Process creation

5. Internal and external fragmentation

6. static vs. Dynamic

7. locking vs. lock free

8. Concurrency, synchronizing

9. Critical sections

10. mapping and namespace control

11. Management data structures