Courses/Computer Science/CPSC 457.F2014/Lecture Notes/Scribe3

| | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Week 6 | Week 7 | Week 8 | Week 9 | Week 10 | Week 11 | Week 12 | Week 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Mon | N/A | 09/15 | 09/22 | 09/29 | 10/06 | N/A | 10/20 | 10/27 | 11/03 | N/A | 11/17 | 11/24 | 12/01 |
| Wed | 09/10 | 09/17 | 09/24 | 10/01 | 10/08 | N/A | 10/22 | 10/29 | 11/05 | 11/12 | 11/19 | 11/26 | 12/03 |
| Fri | 09/12 | 09/19 | 09/26 | 10/03 | 10/10 | 10/17 | 10/24 | 10/31 | 11/07 | 11/14 | 11/21 | 11/28 | 12/05 |

Contents

- 1 About

- 2 Useful Stuff

- 3 Week 1

- 4 Week 2

- 5 Week 3

- 6 Week 4

- 7 Week 5

- 8 Week 6

- 9 Week 7

- 10 Week 8

- 11 Week 9

- 12 Week 10

- 13 Week 11

- 13.1 Nov 17: Persistent Data through Files

- 13.2 Nov 19: Overview of Filesystems

- 13.3 Nov 21: Extended Attributes of Filesystems

- 14 Week 12

- 15 Week 13

About

Hi folks, I'm James P. Sullivan (Sulley), scribe number 3. A bit about me:

- 3rd Year Undergraduate

- Concentration in Information Security

- Undergraduate researcher w/ Trustworthy Systems Group, studying security models and cybersecurity education exercises

- Current CEO of Monsters Inc.

Scare Stats

- Score: 100,021

- Scaring Techniques: Roaring

- Height: 7'6"

- Weight: 760 lbs.

Hope the notes are of use to you. Let me know if there are any changes that you think should be made to the notes (errata, etc) or if you would prefer some different format. You are free to edit these notes as you please (but do try to maintain some semblance of usefulness for everyone else).

Contact me at jfsulliv[at]ucalgary[dot]ca for comments, concerns, criticisms, compliments, etc. Beer is the standard currency of gratitude.

Useful Stuff

- http://blog.petersobot.com/pipes-and-filters is a good description of pipes and filters in the *nix shell

- http://www.muppetlabs.com/~breadbox/software/tiny/teensy.html is a description of the ELF format by example, where they try to hand-make the smallest ELF they can

Week 1

Sept 10: Introduction

- What Problems do OS's Solve?

- Why should we learn about them?

- Practical Skills

What Problems do OS's Solve?

OS's are primarily used to solve three problems:

- To manage time and space resources
  - Time is a finite resource, and must be shared between many processes
    - E.g.: Computational time; your processor needs to be shared between many different computational tasks in an efficient way
  - Space is a finite resource too
    - Usually refers to _memory_ constraints (ie, the RAM that your system has available to all processes) but can be other resources too
- To mediate hardware access for applications
  - Applications should not have to see the gory details of the system they run on
    - OS provides a pretty interface to the nasty hardware and hides some real-world complications from app developers
- To load programs and manage their execution
  - Programs need to be simultaneously managed, need to communicate, and need to have distinct boundaries between one another
    - Computers do many things 'at once' (though this is often just your OS convincing you of this by scheduling time/space for programs)
    - Programs should have interfaces to talk to each other
    - Programs should not be able to see into each other in general; they should have to use some well-defined interface

Why should we learn about them?

OS's are simply one of many very complex systems that we can look at. We could learn the same lessons from Firefox, from OpenSSL, or from any other sufficiently big program that does 'Operating System things'.

Understanding the computer at every layer of abstraction is absolutely essential for self-respecting programmers, even if you only work at an application layer. Having a working understanding of these many layers (and understanding how they interact) is not only useful for systems programmers, but for anyone who wants to really understand what they are making their computer do.

This class isn't _just_ about Linux, it is an example used to teach the principles of Operating Systems and there are common themes that you could take to any platform (Windows, OSX, BSD, etc).

Practical Skills

Some learning outcomes of the course that extend beyond OS principles include:

- Driving a command line

- Reading authoritative and official software documentation

- Distinguishing userland/kernel code

- Assembly patterns for system calls

- Version Control (SVN)

- Writing, loading Loadable Kernel Modules (LKMs)

- Navigating large code bases (LXR)

- Working with and compiling large code bases

Sept 12: From Programs to Processes Part 1

- Which is the program?

- Speaking ELFish

- Strace & System Calls

Helpful Commands:

man [command] : Display the manual page for command

ls : List the current directory

ls -l : Also show the file permissions

file [input] : Take a guess as to the file type of input

cat [input] : Concatenate the file(s)' contents to stdout (i.e., print the file(s) in sequence, byte for byte)

hexdump -C [input] : Dump the bytes of the input file in hex; the flag '-C' also prints the ASCII equivalent of each byte

bless [input] : Hex editor

readelf [input] : Read an ELF file (lots of options, RTFM)

objdump [input] : Dump the contents of a binary file (lots of options, RTFM)

more [input] : Read the input file one screen at a time

strace [command] : Trace the system calls made by the child process that runs `command`

pstree : Make a pretty family tree of the running processes

Which is the program?

Recall that last time we had a simple C program:

int main(int argc, char *argv[])
{
    int i = 0;
    i++ + ++i;  // Undefined behaviour: i is modified twice with no sequence point in between
    return i;
}

Which we compiled using the command `gcc` (specifically, `gcc math.c -o mathx`). This produced a binary file. Which is the program?

- math.c is the _source code_ of the program; this is human readable.
  - But we learned that this program doesn't compile to the same thing on different platforms/compilers (and returns a different value), because `i++ + ++i` is undefined behaviour in C. So this representation is useful but doesn't tell us the full story.
- mathx is the _ELF Binary_ of the program, and this is what contains the x86 instructions (and plenty of other details).
  - ELFs are given as input to the OS by the `execve` system call; by some magic this turns a valid ELF into a running process.
    (Said magic is contained in the function `load_elf_binary(struct linux_binprm *bprm)`, which is in the `fs` directory of the kernel code.)

Speaking ELFish

First thing we tried to do to read the contents of our ELF `mathx` was the `cat` command.

- This displayed a bunch of non-ASCII characters; another tool may be more suited.

We then used the `hexdump` command to display the raw hexadecimal.

- ELF files always start with `\177ELF`; if this is taken away or modified then we can no longer execute the file.

- A file containing `\177ELF` followed by random bytes is still not a valid ELF and would not execute.
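To make the magic-number rule concrete, here is a minimal C sketch that checks only the first four bytes of a file against `\177ELF` (0x7f 'E' 'L' 'F'). The helper names `has_elf_magic` and `file_has_elf_magic` are hypothetical. As noted above, passing this check does not make a file a valid ELF; the kernel's loader goes on to validate the rest of the header.

```c
#include <stdio.h>
#include <string.h>

/* The four identification bytes every ELF file starts with (ELFMAG in <elf.h>). */
static const unsigned char ELF_MAGIC[4] = { 0x7f, 'E', 'L', 'F' };

/* Returns 1 if buf starts with the ELF magic number, 0 otherwise. */
int has_elf_magic(const unsigned char *buf, size_t len)
{
    return len >= sizeof ELF_MAGIC && memcmp(buf, ELF_MAGIC, sizeof ELF_MAGIC) == 0;
}

/* Reads the first four bytes of the file at path.
 * Returns 1 for ELF magic, 0 for anything else, -1 if the file can't be opened. */
int file_has_elf_magic(const char *path)
{
    unsigned char buf[sizeof ELF_MAGIC];
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return -1;
    size_t n = fread(buf, 1, sizeof buf, f);
    fclose(f);
    return has_elf_magic(buf, n);
}
```

For example, `file_has_elf_magic("mathx")` should return 1, while the source file `math.c` should give 0.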

Another even better tool for ELF is the `readelf` tool.

- `readelf -a` was used to show everything about the ELF.

- We can also use `readelf -x [section_name]` to look at the hex of one section.

A third option was to use `objdump`, whose functionality largely overlaps with readelf's.

- `objdump -d` disassembles the ELF so we can see its machine instructions.

Important ELF sections include:

- .text - x86 Code (the actual program)

- .rodata - Read-Only Data

- .ctors .dtors - C++ constructors/destructors

- .data - Initialized R/W Data

- .bss - Uninitialized R/W Data

- .init .fini - Some setup and takedown (main is neither the first nor the last thing to execute in our program)
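A small C file shows where typical definitions usually end up. This reflects common GCC-on-Linux behaviour rather than a rule of the C language, and the variable names are made up for illustration. Compiling with `gcc -c sections.c` and running `readelf -S sections.o` or `objdump -t sections.o` should show the symbols in the sections named in the comments, though placement can vary with the compiler, its flags and optimization level.

```c
/* Hypothetical globals illustrating typical GCC section placement. */
int counter = 42;                    /* initialized R/W data   -> .data   */
int scratch[256];                    /* uninitialized R/W data -> .bss    */
const char banner[] = "hello, ELF";  /* read-only data         -> .rodata */

/* The machine code of every function goes in .text. */
int bump_counter(void)
{
    scratch[0] = counter;            /* .bss storage starts out zeroed */
    return ++counter;
}
```

Note that `.bss` takes no space in the file itself; the loader just reserves zeroed memory for it, which is why uninitialized globals are always 0.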

Strace & System Calls

We briefly looked at the `strace` command, which shows the system calls made by a child process.

System Calls are "Functions that the Kernel exports"; they are interfaces that the kernel provides to the userland. Some examples include:

- fork - Copy the process address space into a new process.

- execve - Execute a program (this can be a binary, a script, etc).

These two are used together to make a basic shell, which is essentially just a `while` loop that creates child processes and executes programs in them.
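The fork/execve pairing can be sketched as a toy shell in C. This is a minimal sketch assuming a POSIX system: `run_command` and `shell_loop` are hypothetical names, and there is no `$PATH` lookup, quoting, pipes, or built-ins, so commands must be typed with full paths and at most 15 words.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

extern char **environ;   /* the current environment, passed on to children */

/* Runs one program the way a shell does: fork(), execve() in the child,
 * waitpid() in the parent. argv[0] must be a full path such as /bin/ls,
 * because execve() does not search $PATH.
 * Returns the child's exit status, or -1 on error. */
int run_command(char *const argv[])
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;                      /* fork failed */
    if (pid == 0) {                     /* child: become the new program */
        execve(argv[0], argv, environ);
        _exit(127);                     /* only reached if execve failed */
    }
    int status;                         /* parent: wait for the child */
    if (waitpid(pid, &status, 0) < 0)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}

/* The shell itself: prompt, read a line, split it into words, run it, repeat. */
void shell_loop(FILE *in)
{
    char line[256];
    for (;;) {
        fputs("$ ", stdout);
        fflush(stdout);
        if (fgets(line, sizeof line, in) == NULL)
            break;                      /* EOF (e.g. ^D) ends the shell */
        char *argv[16];
        int argc = 0;
        for (char *tok = strtok(line, " \t\n"); tok != NULL && argc < 15;
             tok = strtok(NULL, " \t\n"))
            argv[argc++] = tok;
        argv[argc] = NULL;              /* execve() needs a NULL-terminated argv */
        if (argc > 0)
            run_command(argv);
    }
}
```

Calling `shell_loop(stdin)` from `main` gives a prompt where typing `/bin/ls -l` runs `ls`. Running the toy shell under `strace -f` should show the `execve` and `wait4` calls, alongside the `clone` that glibc typically uses to implement `fork`.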

Week 2

Sept 15: From Programs to Processes Part 2

- The structure of an ELF

- Program control

Helpful commands:

yes [string] : Repeatedly print string to STDOUT. Useful to make a long-running process.

ps : List processes in current context.

ps ax : List processes in all contexts.

ps aux : Same as above but also show who owns each process.

grep [regex] [file] : Find matches for the given regular expression in file

strace -p PID : Attach to process PID and trace its system calls

kill -SIG PID : Send signal SIG to process PID (`man signal` for a list of signals)

man [section] [program]: Read the manual page for command in a given section (`man man` for info on sections)

Note: [program] need not be a command; for example, try `man fgetc` and `man elf`

The structure of an ELF

A task was given to find the following information about `mathx` using only `hexdump`.

- Starting address (Entry point)

- Start of the section header table

This is an exercise in RTFM. `man elf` will tell us the structure of the ELF header.

// Output of `man elf` on an x86_64 Linux machine (may not be identical for x86)
#define EI_NIDENT 16

typedef struct {
    unsigned char e_ident[EI_NIDENT];
    uint16_t      e_type;
    uint16_t      e_machine;
    uint32_t      e_version;
    ElfN_Addr     e_entry;     // <---- Entry point address
    ElfN_Off      e_phoff;
    ElfN_Off      e_shoff;     // <---- Section Header Table offset
    uint32_t      e_flags;
    uint16_t      e_ehsize;
    uint16_t      e_phentsize;
    uint16_t      e_phnum;
    uint16_t      e_shentsize;
    uint16_t      e_shnum;
    uint16_t      e_shstrndx;
} ElfN_Ehdr;

We can use `hexdump -C -s [offset] mathx` to skip ahead to the desired byte offset, or we can just count the offset manually.

The virtual address of the entry point was about 0x80480e0. This is a "typical" value for 32-bit ELFs (0x8048xyz).
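Counting offsets by hand is good practice, but the same fields can be read with glibc's `<elf.h>` definitions. This is a minimal sketch assuming a 64-bit ELF whose byte order matches the host (little-endian x86-64); the function names are hypothetical, and it deliberately rejects 32-bit files such as the lecture's `mathx`, which would need the analogous `Elf32_Ehdr`. For reference, `e_entry` sits at byte offset 0x18 in both header classes, while `e_shoff` is at 0x28 in the 64-bit header and 0x20 in the 32-bit one.

```c
#include <elf.h>
#include <stdio.h>
#include <string.h>

/* Reads the ELF header at the start of path into *hdr.
 * Returns 0 on success, or -1 if the file can't be read, isn't ELF,
 * or isn't a 64-bit (ELFCLASS64) file. */
int read_elf64_header(const char *path, Elf64_Ehdr *hdr)
{
    FILE *f = fopen(path, "rb");
    if (f == NULL)
        return -1;
    size_t n = fread(hdr, 1, sizeof *hdr, f);
    fclose(f);
    if (n != sizeof *hdr || memcmp(hdr->e_ident, ELFMAG, SELFMAG) != 0)
        return -1;
    return hdr->e_ident[EI_CLASS] == ELFCLASS64 ? 0 : -1;
}

/* Prints the two fields from the exercise: entry point and section header table. */
void print_elf64_header(const Elf64_Ehdr *hdr)
{
    printf("entry point:          0x%llx\n", (unsigned long long)hdr->e_entry);
    printf("section header table: offset %llu, %u entries of %u bytes\n",
           (unsigned long long)hdr->e_shoff,
           (unsigned)hdr->e_shnum, (unsigned)hdr->e_shentsize);
}
```

The printed values can be cross-checked against the "Entry point address" and "Start of section headers" lines of `readelf -h`.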

Program Control

Recall that one role of the OS is to transparently provide protection to processes, and control their execution. We can interface with the OS in a few ways to control the execution of processes.

We ran `yes hello` in a terminal and "hello" was printed over and over. When Control+C (^C) was pressed, the program stopped and we were able to use the prompt again. This is because a signal was raised to make the program terminate. But we didn't send the signal directly to `yes`: pressing the key first sent an interrupt to the operating system, and the OS (specifically, its terminal driver) then sent `yes` a signal, SIGINT, whose default action is to terminate the process.

(Diagram: the user types ^C, which reaches the OS as an interrupt; the OS sends SIGINT to `yes`, which stops.)

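When Control+Z (^Z) was pressed instead, the program stopped and our shell (bash) told us that `yes hello` was stopped. This is a similar process but with a different signal: SIGTSTP, the terminal stop signal, which behaves like SIGSTOP except that a process can catch or ignore it.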

To resume `yes`, we can run `fg` (foreground); the shell sends the stopped job SIGCONT and brings it back to the foreground.
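^C only *asks* a process to stop: SIGINT's default action is to terminate, but a process may install its own handler instead. Here is a minimal POSIX sketch of that (the names `got_sigint`, `on_sigint` and `install_sigint_handler` are hypothetical). SIGINT and SIGTSTP can be caught this way; SIGKILL and SIGSTOP cannot.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <string.h>

/* Set by the handler; volatile sig_atomic_t is the portable type to share
 * between a signal handler and the rest of the program. */
static volatile sig_atomic_t got_sigint = 0;

static void on_sigint(int signo)
{
    (void)signo;
    got_sigint = 1;   /* just record it; do the real work outside the handler */
}

/* Replaces SIGINT's default action (terminate the process) with on_sigint.
 * Returns 0 on success, -1 on error. */
int install_sigint_handler(void)
{
    struct sigaction sa;
    memset(&sa, 0, sizeof sa);
    sa.sa_handler = on_sigint;
    sigemptyset(&sa.sa_mask);
    return sigaction(SIGINT, &sa, NULL);
}
```

A `yes`-like loop that calls `install_sigint_handler()` first would keep running after ^C (it could check `got_sigint` and exit cleanly), while `kill -9 PID` would still end it.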

`kill` can send arbitrary signals to processes. For example, `kill -9 PID` will raise a `SIGKILL` for PID. To find the PID of an arbitrary program, the best tools are `ps` and `grep`.

ps ax | grep "yes" ---> This can be used to find the PID of all `yes` processes.

ps aux | grep "james" ---> We can use this to find the PID of all processes that the user "james" owns. Note that the `u` option adds user information to the process listing.

An important caveat is that `kill` cannot send arbitrary signals to arbitrary processes; the OS enforces permission checks. If I try to send a signal to another user's process without permission, an error is returned and nothing happens (hopefully).

Trying to run `kill` on such a process (PID 1 is a good candidate) will display this message:

bash: kill: (1) - Operation not permitted

This happens unless we have sufficient privilege (the superuser can do this freely). The picture of this is as follows:

(Diagram: the `kill` command makes the kill system call to the OS; the OS refuses with EPERM (Operation not permitted), so `kill` returns an error and PID 1 stays alive.)

Note the distinction between the `kill` command and the `kill` system call. Typical notation for this is as follows:

- `kill` is a command

- `kill(2)` is a system call (and its man page is in section 2)
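The permission check from the diagram can be observed from C through kill(2) itself. This is a minimal sketch assuming a POSIX system; `can_signal` is a hypothetical helper. It passes signal number 0, which makes the kernel perform the existence and permission checks without delivering anything, so it is safe to try on PID 1.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <signal.h>
#include <sys/types.h>

/* Asks the kernel whether we may signal pid, without actually sending a signal.
 * Returns 0 if allowed, otherwise the errno value (EPERM, ESRCH, ...). */
int can_signal(pid_t pid)
{
    return kill(pid, 0) == 0 ? 0 : errno;
}
```

As an unprivileged user, `can_signal(1)` should return `EPERM`, the same error behind bash's "Operation not permitted" message; as root it returns 0.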

Sept 17: History of the OS

- Revisiting 'What is an OS?'

- Key Concepts of an OS

- History of the OS

Revisiting 'What is an OS?'

Every OS shares some similar features.

- Job control

- Resource Management

- Hardware control

- Providing a User Interface

- File System

- Support for peripheral devices

We can make a definition from the above, but there isn't one end-all definition of an Operating System.

- Definition 1: OS is a mediator between the user and the hardware
  - The OS executes user programs transparently
  - The OS tries to make the system convenient to use
  - The OS tries to use hardware efficiently
- Definition 2: OS is a resource allocator and control program
  - Manage physical resources (time/space)
  - Arbiter for conflicting resource requests
  - Controls execution of programs
  - Enforces restrictions and prevents errors
- Definition 3: OS is whatever the vendor gives you
- Definition 4: OS is the program that runs first and dies last in the computer.
  - The 'always running' program is the Kernel.
  - Has privileged execution not available to other programs.

TL;DR: There is no one definition of the Operating System, but there are plenty of different ways to think about its roles.

Key Concepts of an OS

These are must-know concepts for this course that apply to most operating systems.

- Resource Allocation

- 'Accounting' (keeping track of processes, user actions, etc)

- Security and access control enforcement

- User Interface

- Program Execution Environment

- Support for I/O

- Abstraction layer for files (which are really just bytes arranged in particular ways)

- Communications (between processes, users, machines)

- Error detection and handling

Five topics that we will cover in detail are as follows:

- Processes
  - A program in execution
- Files
  - Abstraction for data storage
- Address Space
  - An allocated slice of physical or virtual memory for a process
- I/O
  - Devices and frameworks for interacting with the world
- Protection and Security
  - Hardware mechanisms to enforce security policies
  - Address translation and enforcement of address restriction (R/W rights, ownership of memory)

History of the OS

1st Generation (40's - 50's)

This was war time for many countries. As such, computers were often used for logistics, code breaking, etc.

Computers at this point were:

- Vacuum Tube based

- Very big, very expensive (often countries had one computer, if at all)

- No OS

- Programs run in 'batch' (one at a time).

Some examples include

- Z3

- Colossus

- Mk.1

- ENIAC

2nd Generation (50's - 60's)

This was the beginning of the modern computational era.

- Transistor based

- Mainframes

- Programmed by punchcards or tapes

- Some primitive OS's emerged (usually written in assembly)

Examples:

- IBM 1401

- IBM 7094

3rd Generation (60's - 80's)

Golden age of computing. Emergence of many things we use to this day (C, UNIX, BSD, etc)

- Integrated Circuits enabled personal computers

- Multiprogramming (doing more than one thing at once)

- Complex OS's that supported many users and many tasks

Examples:

- OS/360

- CTSS

- MULTICS (and Unix)

- BSD

4th Generation (80's - Today)

The downfall of computing, beset by GUIs and web 2.0 e-cloud-javascript-as-a-service.

- Microprocessors

- Personal Computers

- Powerful embedded devices

Examples:

- DOS

- MS-DOS

- Windows

- Linux

- OS X

Unix Philosophy (Important!)

(Note- some of this is editorialized with my own knowledge of Unix)

Unix is an extremely influential OS that is a predecessor of many (sane) modern operating systems. This does not just include Linux, but also BSD and Solaris (heavyweights in the server world), and the familiar Mac OS X.

Unix was designed concurrently with C and in a real sense the two are the same idea applied to different topics. The core philosophy of Unix is as follows:

- Programs should be small.

- Programs should be simple.

- Programs should be elegant.

- Programs should be quiet (that is, say only what they need to say, don't bog down the output with useless text).

- Programs should do one thing really, really well.

- Programs should store data in flat text files. (A universal language means that programs can work well together).

- Programs should be portable more than they are efficient.

In particular, the last point is absolutely key to the success of Unix. Most OS's are very tied to the hardware they run on, but Unix is insanely portable because it is written mostly in C, which is a language that also embodies this philosophy (write once, compile anywhere).

Most of the core programs we have seen in the terminal adhere to the above, and it makes them incredibly reliable, understandable, and composable. These programs are generally considered 'filters' in Unix terminology, which means they take text from STDIN and spit out text to STDOUT. By defining this common interface, we can stick together Unix programs in all sorts of interesting ways, even if each program is ridiculously simple.
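A filter really can be this small. The sketch below is a hypothetical `upcase_filter` (not a standard tool) that copies its input to its output, upper-casing letters along the way; it knows nothing about where its input comes from or where its output goes, which is exactly what makes it composable.

```c
#include <ctype.h>
#include <stdio.h>

/* A classic Unix filter: copy in to out, upper-casing letters along the way. */
void upcase_filter(FILE *in, FILE *out)
{
    int c;
    while ((c = fgetc(in)) != EOF)
        fputc(toupper(c), out);
}
```

Wrapped in a `main` that calls `upcase_filter(stdin, stdout)` and compiled to a (hypothetical) `./upcase`, it slots into a pipeline like any other filter, e.g. `ps ax | ./upcase | grep YES`.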

Take the `yes` program. By default it prints 'y' to STDOUT until it is terminated. This seems silly, but anyone who has done configuration on Unices knows that there are often many prompts that you have to answer with 'y/n'. `yes` was designed to get around this by spamming 'y', which can be piped into the other program. For more on pipes and filters, read the link at the top.
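In that spirit, the heart of `yes` fits in a few lines. This is a hypothetical re-implementation for illustration, not the coreutils source; the finite `count` parameter exists only so the sketch can be tested, and a negative count loops forever like the real thing.

```c
#include <stdio.h>

/* Sketch of yes(1): print word (default "y") and a newline over and over.
 * count < 0 means forever; otherwise stop after count lines.
 * Also stops early if a write fails. Returns the number of lines written. */
long yes_lines(FILE *out, const char *word, long count)
{
    if (word == NULL)
        word = "y";
    long written = 0;
    while (count < 0 || written < count) {
        if (fprintf(out, "%s\n", word) < 0)
            break;
        written++;
    }
    return written;
}
```

In a pipeline such as `yes | rm -i *.tmp`, the consumer reads a 'y' every time it prompts. When the consumer exits, the next write by `yes` triggers SIGPIPE, whose default action terminates it; this is also why `yes | head -n 3` finishes instead of running forever.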

Sept 19: What is an OS? The Big Fuzzy Picture

- Recap

- OS vs Kernel vs Shell vs Distro

- Defining the Operating System

Recap

Quick points summarizing what we know.

- OS does not execute source code or programs, but it does manage their execution.

- ELF is a part of the contract between the compiler and the OS.

- We also know a bit about how these ELFs become processes.

- The job of the OS is to get out of the way, and cover the gory details.

What we want now is a formal definition of the words that we've been throwing around.

OS vs Kernel vs Shell vs Distro

We were asked three questions, which each of us tried to answer on our own.

- What is the difference between an Operating System and a Shell?
  - Shell is userland, OS is not. (What enforces this distinction?)
  - Shell is a dispatcher, a way for the user to interact with the OS
- What is the difference between an Operating System and a Distribution?
  - Two distributions of Linux may not use an identical code base; they often make modifications.
  - A distribution, then, could be seen as a different 'flavor' of the same underlying OS: largely the same code, with selective modifications (where does the divide lie for this? At what point of modification is something a new Operating System?)
  - An analogy was made to the OS being a car, and the distribution the make/model; however, Dr. Locasto argued against the use of analogies in Computer Science.
- What is the difference between an Operating System and the Kernel?
  - The OS is a collection of source code that manages execution, interfaces with hardware, and manages resources.
  - The kernel is the live, running core of the OS that encapsulates its main functionality.

By themselves, each of these is not very useful. A kernel is just a spinning loop, an OS is a pile of code.

We can see Linux's source code as being the Operating System, divided into important subdirectories that encapsulate specific functionality.

- /arch - architecture specific code

- /block - block oriented I/O

- /crypto - cryptographic code

- /drivers - code to interact with hardware

- /fs - file system code (here's where we found the ELF loader)

- /kernel - the core of the OS

- /include - header files for macro definitions, function prototypes, etc

And plenty more.

Defining the Operating System

After all of this, can we finally offer a definition of the Operating System? What we can see is that there isn't a universally agreed on definition. Some examples of definitions are:

- A pile of source code

- A program that runs directly on the hardware (but don't all programs do this? Precision is important)

- Resource Manager

- An execution target, or an API

- A supervisor with some set of privileges

- Everything that is not in Userland (ie, runs in privileged mode)

As it seems, the OS performs many tasks and is implemented in many unique ways. We can start by offering a broad perspective on what the OS looks like as an abstraction.

Abstract picture of the OS, from top to bottom:

- Userland: processes P1 ... Pn, separated from one another by an abstract division enforced by the OS (in particular, by memory management)
- Abstract division between Kernel and Userland (enforced by some reality of hardware)
- The OS Kernel, containing Memory Management and Scheduling, and exporting its functionality through the System Call Interface
- Hardware: the CPU (with its MMU), Physical Memory, and Peripherals/I/O

What we want to do is understand how these abstractions (in particular, the imaginary lines between the OS and the userland, and between processes) are implemented, and what realities enforce them (and why this is important).

We will spend a great deal of time on three particular sub-modules seen here:

- Memory Management

- Scheduler

- System Call Interface

And their implementation.

Week 3

Sept 22: Unravelling the Layers of Abstraction

We covered a great deal of content today, which at a high level started to answer these questions:

- How is the abstract division between the Kernel and the Userland enforced?
  - What hardware does this rely on?
  - What does the x86 instruction set provide to kernel/userland developers to help with this abstraction?
- How does a process ask the OS to do things?
  - Recall that any useful work (namely, I/O) must go through the OS through some interface (System Calls).
  - Processes can execute instructions but they can't tell anyone about it by themselves.

Objectives:

- Differentiate the execution environments of Kernel and Userland code.

- Understand CPU modes (in particular, protected mode and the idea of rings)

- Understand the Interrupt Descriptor Table (IDT), which enables system calls, scheduling and context switching

- Understand the Global Descriptor Table (GDT), and what the Segments contained in it mean

Why do we need hardware support?

There are hardware facilities (namely, interrupts) that are extremely useful for enforcing data separation, enabling context switching, and creating an interface into the Kernel. These interrupts are asynchronous; that is to say, when one is triggered, the CPU drops what it was doing and begins executing whatever function is pointed to by the Interrupt Vector for that type of interrupt. What if we didn't have these?

Imagine trying to schedule a multithreaded application without any interrupts. We need all of the threads to execute but we only have one CPU available for them to run on. There needs to be some clock that tells us when to switch threads and work on something else. The hardware makes this possible with a timer that raises an interrupt at a regular interval, transferring control to the kernel, which can then switch to another thread.

CPU States (in x86)

An x86 CPU can operate in one of five "modes". These modes all share some common traits, but there are some instructions or operations that can only be done from the context of some mode. This is of course essential to keep our data safe: if any process could read and write any physical memory address, or execute any privileged instruction, then we would have no notion of protection between processes. The picture below shows the state diagram describing these modes and the conditions to transition between them.

In particular, "Protected Mode" is what the Kernel would be executing in, with access to most of the instructions. There is a "System Management Mode" also available that has access to additional features; even the OS can't know about execution at this mode (thus this is an interesting mode for rootkit developers).

Why our picture of the OS is wrong

We've constructed a beautiful abstract picture of the OS, but it is a false one. In reality, the OS is just an ELF that lives in the same memory as all of the other processes. So what enforces the "division" between the Kernel and Userland? What makes it so that the OS is more powerful? The answer is twofold.

- CPL (Current Privilege Level) bits in the CPU, DPL (Descriptor Privilege Level) bits in the Segment Descriptors.

- Virtual Address Translation.

Global Descriptor Table

The GDT is a table that contains a number of Segment Descriptors. Each entry is 64 bits of data about a Segment of memory, and there is one descriptor per memory segment.

A special register called the GDTR holds the base (linear) address and the size limit of the GDT.

Each Segment Descriptor holds metadata regarding some range of memory.

- Contains the base address of the segment

- Contains the Segment Limit (ie, the size)

- Contains the Descriptor Privilege Level bits at bits 13-14 of the descriptor's upper 32 bits (bits 45-46 of the whole 64-bit descriptor).

What are these DPL Bits? They are two bits in each Segment Descriptor that tell us what Privilege Ring the segment is in.

- Ring 0: Highest Privilege level (Operating System code/data)

- Ring 1,2: Higher Privilege level (usually ignored, but sometimes device drivers or other low-level but untrusted code/data)

- Ring 3: Lowest Privilege level (All userland applications)

Whenever the CPU's context changes, its own CPL bits are changed to correspond to the code segment it will execute. If the CPU is executing at Ring 3 and attempts to access a segment marked as Ring 0, the CPU raises a fault (a general-protection fault) and the kernel typically terminates the process. Thus, these bits enforce a hierarchy of execution privilege. Note that the OS is responsible for setting these bits.
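To make the bit positions concrete, here is a C sketch that pulls the fields described above out of a raw 64-bit descriptor, following the layout in Intel's manuals. The helper names are hypothetical. The example values in the test are flat 4GB ring-0 and ring-3 code descriptors of the kind typically loaded by 32-bit Linux; they differ only in their DPL bits.

```c
#include <stdint.h>

/* Field extraction for a 64-bit x86 segment descriptor (one GDT entry). */

/* Descriptor Privilege Level: bits 45-46 (bits 13-14 of the upper dword). */
unsigned descriptor_dpl(uint64_t d) { return (unsigned)((d >> 45) & 0x3); }

/* Present flag: bit 47. */
int descriptor_present(uint64_t d) { return (int)((d >> 47) & 0x1); }

/* The 32-bit base is scattered: bits 16-39 hold base 0..23, bits 56-63 hold base 24..31. */
uint32_t descriptor_base(uint64_t d)
{
    return (uint32_t)(((d >> 16) & 0xFFFFFF) | (((d >> 56) & 0xFF) << 24));
}

/* The 20-bit limit: bits 0-15 hold limit 0..15, bits 48-51 hold limit 16..19.
 * It counts bytes, or 4KB pages when the granularity flag (bit 55) is set. */
uint32_t descriptor_limit(uint64_t d)
{
    return (uint32_t)((d & 0xFFFF) | (((d >> 48) & 0xF) << 16));
}

int descriptor_granularity_4k(uint64_t d) { return (int)((d >> 55) & 0x1); }
```

For instance, 0x00CF9A000000FFFF decodes to base 0, limit 0xFFFFF pages (all 4GB) and DPL 0, while 0x00CFFA000000FFFF is identical except for DPL 3.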

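We only use 4 hardware-enforced segments in Linux, namely the Kernel Code, Kernel Data, User Code, and User Data segments, which together cover the entire range of memory. On 32-bit Linux the address space is conventionally split as follows:

- Top 1GB is Kernel Code/Data, at Ring 0 (DPL bits 00). This is virtual addresses 0xc0000000 - 0xffffffff.

- Bottom 3GB is User Code/Data, at Ring 3 (DPL bits 11). This is virtual addresses 0x00000000 - 0xbfffffff.

(In practice all four Linux segments are "flat", each starting at 0 and spanning the full 4GB; the 3GB/1GB split itself is enforced by the page tables, which is where Virtual Address Translation comes in.)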

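There are a number of segment registers (Code Segment, Data Segment, etc.) that hold segment selectors describing the segments a program uses. With its low three bits masked off, a selector is the byte offset of a descriptor within the GDT; the low two bits are the Requested Privilege Level, and bit 2 selects the GDT or the LDT. We saw these had low values (0x70, for example) in gdb, which indicates that this table might not be very big.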
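The selector format explains why these values are small. Here is a C sketch of the decoding, following the layout in Intel's manuals (`struct selector` and `decode_selector` are hypothetical names). For example, 0x73 decodes to GDT index 14 with RPL 3, and 0x33, the value x86-64 Linux typically uses for the user code segment, decodes to index 6 with RPL 3.

```c
#include <stdint.h>

/* A segment selector (the value in CS, DS, ...):
 * bits 0-1 are the Requested Privilege Level, bit 2 picks the GDT (0)
 * or LDT (1), and bits 3-15 index the descriptor table. Since each
 * descriptor is 8 bytes, index * 8 is the descriptor's byte offset. */
struct selector {
    unsigned index;   /* which descriptor in the table */
    unsigned ti;      /* table indicator: 0 = GDT, 1 = LDT */
    unsigned rpl;     /* requested privilege level, 0-3 */
};

struct selector decode_selector(uint16_t sel)
{
    struct selector s = { sel >> 3, (sel >> 2) & 1u, sel & 3u };
    return s;
}
```

So a 16-bit selector can address at most 8192 descriptors, and the small values seen in gdb simply mean Linux uses only the first few dozen entries.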

Interrupt Descriptor Table

We briefly talked about the IDT, which is essentially an array of function pointers (strictly, gate descriptors that each point to a handler). Vectors 0-31 are reserved by the hardware for IA32 exceptions, and the OS assigns the rest; each interrupt that can occur must have an associated handler in the IDT. An example is interrupt `0x80`, which Linux uses to enable system calls, as we will see. Defining this table is one of the first things an OS does when it is loaded into memory.

A special register called the IDTR holds the base (linear) address and the size limit of this table.

Sept 24: System Calls

System Calls are a contract between processes and the OS, that act as an interface into the Kernel in a safe and controlled manner.

What is calling scope?

We did a brief example in [insert OOP language here] to demonstrate a problem common to high-level and low-level applications- how do we make some code accessible, but not all of it?

In OOP, this is given by private, public or protected declarations. These define what can be accessed by whom. Of course these are just abstractions enforced by software: the compiler rejects the access (and in Java's case the JVM also checks it when loading classes), but nothing in the hardware prevents you from reaching private members. In Java, for example, reflection (`setAccessible(true)`) can often call a method regardless of it being "inaccessible".

public class A {
    private int foo() { return 1; }
}

class B {
    int callFoo() {
        A a = new A();
        return a.foo(); // Is this legal? javac says no (foo() has private access in A), but reflection can still reach foo() at run time
    }
}

However, in the OS, we have some more leverage for enforcement- namely, we have the CPL/DPL bits and hardware segmentation. The CPU can enforce this separation for us, in particular by defining separate Kernel and Userland segments.

Segfaults demystified

We've all dealt with segmentation faults, but we only have a vague impression that it is a read or write from a 'bad' address. What does this mean?

We can reliably trigger this by trying to jump to an address in Kernel code from Ring 3 (a Userland process). This tries to set EIP to that address, and the CPU faults because code running in Ring 3 may not touch memory reserved for Ring 0; the kernel then raises SIGSEGV (Segmentation Violation) in the process. (On Linux this check is actually made by the paging hardware, since kernel pages are marked supervisor-only, but the effect is the same.)

;; This will assemble without complaints, but will crash on execution.
xor eax, eax
inc eax
inc eax
inc eax
inc eax
call 0xc_______   ;; can we call an address in the Kernel? NOPE! SIGSEGV raised.

However this seems to be a problem. If we don't have access to Kernel functions, how do we do anything useful? We need to use the OS to do almost anything useful (namely, IO, mem management, etc). So we need access. This is the function of system calls.

System Calls are not Functions

If we want to call a System call (write(2), for example), the man page will tell us that we should write this:

write(fd, buf, num)

Which looks just like a function call... This is deceiving! To further the confusion, consider this program:

#include <unistd.h> // For write(2)
#include <stdlib.h>

int foo(int a, int b) { return a+b; }

int main(void) {
    foo(2,1);               // Function call!
    write(1, "Test\n", 5);  // System call?
    return 0;
}

When we compile this with `gcc -o prog prog.c`, and check the assembly, we will see...

40051e: be 01 00 00 00    mov esi,0x1
400523: bf 02 00 00 00    mov edi,0x2
400528: e8 d9 ff ff ff    call 400506 <foo>

Which is the call to foo. However, we also see this!

400532: be d4 05 40 00    mov esi,0x4005d4
400537: bf 01 00 00 00    mov edi,0x1
40053c: b8 00 00 00 00    mov eax,0x0
400541: e8 9a fe ff ff    call 4003e0 <write@plt>

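So is write a function? Sort of. `write@plt` is a stub in the Procedure Linkage Table (PLT) that jumps to `write()` in the standard C library (glibc), which is a thin wrapper around the system call. One good reason for the wrapper: it hides the architecture-specific system call convention and turns error returns into -1 with `errno` set, so the same C code works on every platform.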

What does a real system call look like, then? It's similar, but there are two differences.

- We use registers for arguments, not the stack: EAX holds the system call number, and the arguments go in EBX, ECX, EDX, ESI, EDI (and EBP).

- We don't use `call addr`, we use `int 0x80` (raise an interrupt and transfer control to handler 128 in the IDT).

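section .data
msg:    db "Test", 10          ; "Test" followed by a newline (5 bytes)

section .text
global _start
_start:
    mov eax, 4                 ; sys_write number (32-bit x86)
    mov ebx, 1                 ; STDOUT file descriptor
    mov ecx, msg               ; First character in a buffer containing our message
    mov edx, 5                 ; Bytes to write from this buffer
    int 0x80
    mov eax, 1                 ; sys_exit number, so we don't run off the end
    xor ebx, ebx               ; exit status 0
    int 0x80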
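glibc also exposes a generic `syscall(2)` function that loads any system call number and its arguments into the registers the kernel expects and traps into the kernel. The sketch below (with a hypothetical `raw_write` helper) performs the same write as the assembly above without going through the `write()` wrapper. Note that system call numbers differ by architecture: `SYS_write` is 4 on 32-bit x86, matching `mov eax, 4`, but 1 on x86-64, where the arguments travel in RDI, RSI and RDX instead.

```c
#define _GNU_SOURCE
#include <sys/syscall.h>
#include <unistd.h>

/* Invokes write by system call number, bypassing glibc's write() wrapper.
 * Returns the number of bytes written, or -1 with errno set on error. */
long raw_write(int fd, const void *buf, size_t len)
{
    return syscall(SYS_write, fd, buf, len);
}
```

Calling `raw_write(1, "Test\n", 5)` prints the same line as the assembly version; under `strace` both show up as an identical `write(1, "Test\n", 5)` call.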

Interrupts

So what does this `int` instruction do? When `int` is executed, the CPU stops executing the process that issued it and transfers control to the handler in the IDT entry selected by the argument to `int`. `int 0x80` will become very familiar to us, as it transfers control to the system call dispatcher.

When this happens, the CPU is able to transition from Ring 3 to Ring 0 and start executing Kernel code. This is how system calls can be called from Userland.

You can see the implementation of sysenter_call in the kernel at linux/arch/x86/kernel/entry_32.S, line 446.

There is also another way to transfer control to a system call, which Intel added to account for the slowness of the interrupt method; this is the SYSENTER instruction (but int 0x80 is probably more common to see).

Sept 26: System Calls - Part 2

Assignment 1 Tips

Dr. Locasto gave some tips for the assignment at the start of the class.

- Question 1

- Know how to use ptrace(2)

- You don't have to write a disassembler- use an existing one (udis86 is recommended)

- Question 2

- Check out objcopy(1), which does a similar job- look at what it modifies in the ELF to give you an idea of what your solution needs

- The code that you're injecting is cookie-cutter shellcode (a common exploit payload), which there is plenty of good literature on

- Question 3

- Understand the paradigms in LKM code

- Look at how other pseudo-devices are implemented to get an idea of what yours should do

- Don't re-implement CRC, this is existing kernel functionality

- Make sure the pseudo-device is not writable


x86 Function Calls

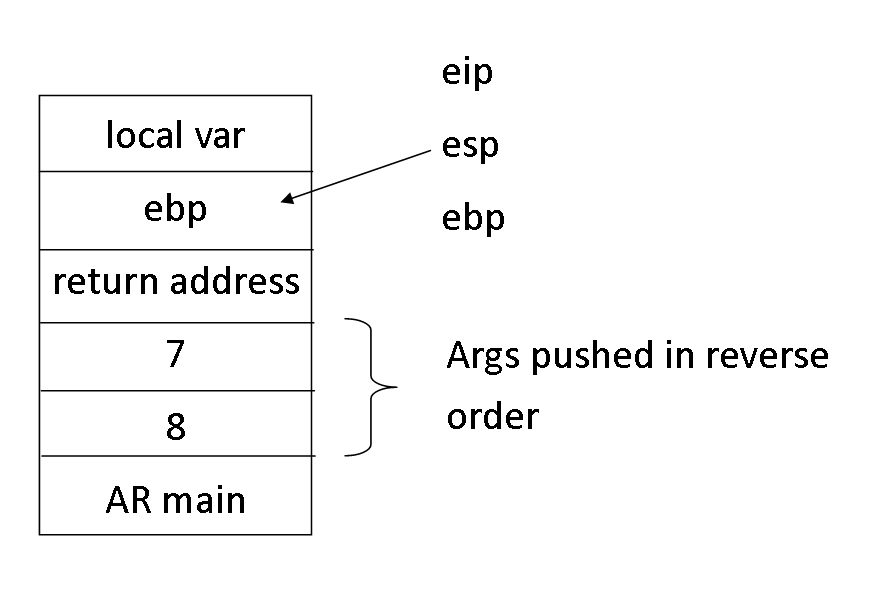

Recall that function calls and system calls look very similar in source code, but are implemented very differently. Namely, function calls (in the 32-bit x86 convention) push arguments on the stack in reverse order and then directly transfer control to the target function, while system calls load arguments into registers and use an interrupt to transfer control to the system call dispatcher in the IDT.

What is the calling convention on x86? It is a contract between functions to maintain an Activation Record for each function call, so that we always know how to get back to the caller, all the way up to the top function (conceptually, the main function).

Activation Records consist of the following:

```
========   TOS ^
|  EBP |   <- Contains address of the saved EBP in the _previous_ AR
========
|  Ret |   <- Contains the return address (ie, the next instr. after 'call' in the caller)
========
| Args |   <- Arguments provided by the caller
========
|  ... |   Previous Frames
```

Since each saved EBP points to the address of the last saved EBP, this is basically a linked list of Activation Records.

The contract between the caller and the callee is as follows.

- The caller will push the arguments in reverse order.

- The caller will push the return address when `call` is executed (implicitly)

- The callee will immediately push the old EBP onto the stack, and then copy ESP into EBP, so that both point to this stack entry

- The callee will subtract some number of bytes from ESP to make room for local variables

Note that this is exactly what control transfer looks like within the Kernel and within userland- it's only when crossing the boundary that system calls are necessary.

The Other Side of System Calls

We know how to make system calls, but what do they look like? How are they implemented? We got a small taste of kernel code by examining two system calls.

getpid(2) : Returns the PID of the calling process. write(2) : Writes some bytes to an open (writable) file.

When we examine the implementation, we see a common definition of `SYSCALL_DEFINEx(name)`, where x is a number (0 and 3 respectively in this case) and name is the system call name. This is not a function! This is a preprocessor macro that will eventually be expanded to a proper function definition.

SYSCALL_DEFINE0(getpid)

---> Expands to a nice function definition of sys_getpid()

SYSCALL_DEFINE3(write, unsigned int, fd, const char __user *, buf, size_t, count)

---> Expands to a nice function definition sys_write(unsigned int fd, const char __user *buf, size_t count)

SYSCALL_DEFINEx(name) defines a system call with x arguments by the name of `sys_name`. So if you want to find a system call definition, search for the macro invocation (e.g. `SYSCALL_DEFINE3(write`) rather than the bare name, which will give you many, many hits.

getpid(2)

This is an incredibly simple function that starts to demonstrate the kernel convention- do a bit of work and pass it off to a lower layer.

```c
SYSCALL_DEFINE0(getpid)
{
    return task_tgid_vnr(current);
}
```

That's literally the whole thing. We can dive down the rabbit hole by checking out `task_tgid_vnr` but it won't go very deep.

Note that `current` is ANOTHER macro. This one gives you access to information about the current thread of execution (which conveniently contains a PID, namely whoever in userland called getpid).

write(2)

A more involved example is write, but it's still not very exotic.

```c
SYSCALL_DEFINE3(write, unsigned int, fd, const char __user *, buf,
        size_t, count)
{
    struct fd f = fdget_pos(fd);
    ssize_t ret = -EBADF;

    if (f.file) {
        loff_t pos = file_pos_read(f.file);
        ret = vfs_write(f.file, buf, count, &pos);
        if (ret >= 0)
            file_pos_write(f.file, pos);
        fdput_pos(f);
    }

    return ret;
}
```

Once again, the code is simple but it goes quite deep, possibly 4 or 5 layers down. At each layer, a very small amount of work is done.

Week 4

Sept 29: Processes

Quick recap on Intra-kernel calling conventions

At the end of the last class, Dr. Locasto briefly answered a question regarding the calling convention in the Kernel- as it turns out, this is identical to the function calling convention at userland. This implies that the Kernel is one single program- often called a Monolithic Kernel. An alternative is a Microkernel, where there are a number of running pieces of a kernel, and they can only communicate via special channels (similar to how userland must use the system call interface to talk to the kernel).

Key differences:

- Monolithic Kernels are faster than Microkernels (A jmp is far less costly than a full context switch)

- Monolithic Kernels are simpler than Microkernels (Less book-keeping)

- Monolithic Kernels are harder to secure than Microkernels (Any introduced code has full access to the kernel)

Linux is a Monolithic Kernel, Minix is an example of a Microkernel.

Aside: Shellshock

Shellshock is an interesting bug in `bash`, that boils down to a simple parsing error. Bash allows the user to export environment variables and functions, which are kept in a key/value table (easily seen with `env`).

However, a bug in the parsing of these causes something like this:

env x='() { :;}; evil commands' bash -c "good commands"

to be parsed past the `}` that should end the function definition. The result is that the trailing `evil commands` get executed as soon as the new bash shell starts up and imports the variable, before `good commands` even run.

Defining a Process

The process abstraction is a way in which the Kernel exports a virtual view of the CPU. In a real sense, every process thinks that it has its own computer that it runs on. This is maintained by memory management and scheduling, mainly.

Processes vs Threads vs Tasks

What's the difference between processes, threads, and tasks? The answer is nothing- Linux treats them as one entity, namely a lightweight process.

These can share address spaces, so can act as threads, and they can have their own, acting as processes. The three terms are basically interchangeable.

Definition

A process is all of the following:

- A program in execution

- A set of stored virtual Instruction Pointers

- A set of stored virtual registers

- A set of resources, metadata, pending events

- A process address space

These are all things that Linux must keep track of for every process.

The kernel defines processes in the `task_struct` struct, which is ~200-300 lines of code in /include/linux/sched.h at L1215. Though there's a lot of stuff in this definition, we can see some familiar things already.

volatile long state; /* -1 unrunnable, 0 runnable, >0 stopped */

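Refers to some metadata about the process's state. Note that the `volatile` keyword in C tells the compiler *not* to keep this variable cached in a register: every access must actually go to memory, because the value can change behind the compiler's back (here, other CPUs or interrupt handlers may update a task's state).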

struct plist_node pushable_tasks; struct rb_node pushable_dl_tasks;

Nodes that link this task into other kernel data structures of tasks: a priority-sorted list (plist) and a red-black tree.

struct mm_struct *mm, *active_mm;

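The memory descriptor (mm_struct) describing this process's address space. Kernel threads have no address space of their own (mm is NULL) and borrow one through active_mm.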

unsigned int personality;

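The process's execution domain (see personality(2)), which lets Linux adjust its behaviour for this process, for example to emulate another Unix-like system's ABI or to disable address-space randomization.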

There's lots of other things in there, we were encouraged to take a look.

Process States

A process can be in a few different states, which the scheduler shifts processes between.

- TASK_RUNNING : Runnable; either currently on a CPU or waiting in a run queue for its turn.

- TASK_INTERRUPTIBLE : Asleep, waiting for something (ie, waiting for IO); a signal can also wake it.

- TASK_UNINTERRUPTIBLE : Asleep, and signals will not wake it; only the awaited event will.

- misc : traced, stopped, zombie

When the state diagram that Dr. Locasto drew is available, I will post it here.

Oct 1: Processes, Part 2

- Where do processes come from?

- What is an efficient way to make processes?

- Useful Strace flags

- COW - Copy on Write

- Child-Parent communication

Useful strace flags

- -e "trace=[string]" : specify the type of calls to examine

- -i : print the EIP at each call

- -f : follow the parent and child, print PID

- -o : write to a file

These help us narrow down the output of strace to something more readable.

Where do processes come from?

Recall that we spent some time looking at the fork(2) system call, which (apparently) copies the entire process into a new child process. This seems to have two issues.

- How is it done?

- Is it efficient?

The answer to the first one is... Further layers of indirection. fork(2) actually calls clone(2), another kernel system call that can do everything fork can and more. In particular, there are flags for clone(2) that can ask the OS to make a full copy (new process), or to share an address space (threads), or whatever else we may want.

fork(2) vs clone(2)

As it turns out, fork does nothing but call clone, which is another system call that is more featured and complete than fork is.

```
fork(3)          <--- Library Level
   |
   v
clone(2)         <--- System Call
   |
   v
-------- KERNEL
do_fork()
   |
   v
copy_process()   <--- creates new task struct
```

Efficient process creation and COW

Copying the entire process seems costly. In particular, we consider the cost of the new Process Address Space. The naive option is to copy the entire thing, but this is expensive in both space and time. The solution is COW- Copy on Write.

Copy on Write is a mechanism that the Kernel uses to make this procedure much faster. The idea is that until the child or the parent writes to memory, there is no need to give the child its own copy of the address space- it can just share the parent's pages. However, as soon as a write happens, the states of the two processes diverge. At that point, a new copy of the affected memory page (about 4k of physical memory) is made, and the process that wrote gets this new page instead.

As to when and how this is triggered: when the address space is copied, the kernel marks the shared pages read-only in both processes' page tables. A write to one of them therefore causes a page fault (a trap into the OS), and the fault handler recognizes the page as copy-on-write and safely copies it.

Parent-Child Communication

We ended with a question as to how parents and children communicate. The answer is not through interrupts and traps, but rather through a variety of other IPC mechanisms, like signals, or shared memory.

Oct 3: The Process Address Space

- What is the PAS

- What is its purpose and job

- A glimpse into paging and segmentation

- Examining a PAS

- PAS operations

What is the PAS

A process address space is an abstraction to map virtual memory locations to physical addresses. A process is to the CPU what the PAS is to physical memory- an abstraction layer to make things easier and to give the illusion that a given process owns the entire computer.

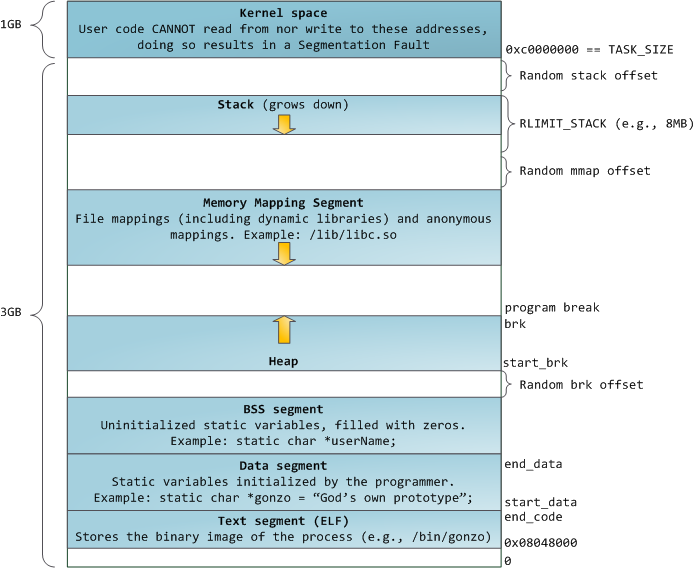

At a high level, the PAS looks something like this:[1]

which seems to give each process the illusion that it owns (most of) this 4GB chunk. Of course, this isn't possible since we have so many processes running, and RAM is expensive.

The solution is a two-step translation from Logical to Linear (Virtual) to Physical memory addresses.

Logical Addresses ----Segmentation-----> Linear Addresses -----Paging-----> Physical Memory

The last step (paging) is what we are particularly interested in for the PAS.

Paging and Segmentation

We've previously seen that Linux uses some hardware segmentation to separate user and kernel code/data, but we haven't yet seen paging. Paging is a mechanism to take small chunks of physical memory (typically 4k-8k) called page frames, and allocate them as needed to different processes while maintaining the illusion that the process has a contiguous 3GB of memory.

- Physical memory organized into Page Frames

- PAS is a set of pages

- Page is a fixed size (4k-8k) chunk of bytes

Examining a PAS

We can look into a PAS in a few ways. One good way is the /proc/ virtual file system, which is a directory that is basically just a collection of process metadata. Each PID has a directory in this VFS, and we can examine processes in this way. A particular entry called `self` is a symbolic link that always points to the directory of whichever process opens it.

- `cat /proc/self/maps` : Show the process address space for _this_ cat process.

- `cat /proc/12345/maps` : Show the PAS for PID 12345 if it exists.

The output of this usually looks like this (note- executed on x86_64 machine):

```
00400000-0040c000 r-xp 00000000 08:01 666171 /usr/bin/cat
0060b000-0060c000 r--p 0000b000 08:01 666171 /usr/bin/cat
0060c000-0060d000 rw-p 0000c000 08:01 666171 /usr/bin/cat
01079000-0109a000 rw-p 00000000 00:00 0 [heap]
7fc7729e5000-7fc772c9f000 r--p 00000000 08:01 712316 /usr/lib/locale/locale-archive
7fc772c9f000-7fc772e38000 r-xp 00000000 08:01 658669 /usr/lib/libc-2.20.so
7fc772e38000-7fc773038000 ---p 00199000 08:01 658669 /usr/lib/libc-2.20.so
7fc773038000-7fc77303c000 r--p 00199000 08:01 658669 /usr/lib/libc-2.20.so
7fc77303c000-7fc77303e000 rw-p 0019d000 08:01 658669 /usr/lib/libc-2.20.so
7fc77303e000-7fc773042000 rw-p 00000000 00:00 0
7fc773042000-7fc773064000 r-xp 00000000 08:01 658641 /usr/lib/ld-2.20.so
7fc773230000-7fc773233000 rw-p 00000000 00:00 0
7fc773241000-7fc773263000 rw-p 00000000 00:00 0
7fc773263000-7fc773264000 r--p 00021000 08:01 658641 /usr/lib/ld-2.20.so
7fc773264000-7fc773265000 rw-p 00022000 08:01 658641 /usr/lib/ld-2.20.so
7fc773265000-7fc773266000 rw-p 00000000 00:00 0
7fff861b2000-7fff861d3000 rw-p 00000000 00:00 0 [stack]
7fff861fc000-7fff861fe000 r-xp 00000000 00:00 0 [vdso]
7fff861fe000-7fff86200000 r--p 00000000 00:00 0 [vvar]
ffffffffff600000-ffffffffff601000 r-xp 00000000 00:00 0 [vsyscall]
```

To dissect a single line of this:

```
7fc772c9f000-7fc772e38000 r-xp 00000000 08:01 658669 /usr/lib/libc-2.20.so
|           1           | |2 | |  3   | | 4 | | 5  | |         6         |
```

1: Virtual Address range; the memory this region occupies in the PAS

2: Permissions (read, write, execute, private/shared [p/s])

3: Offset into the file this region was mapped from (here, a shared object file, ie an ELF)

4: Major and Minor device number of the device holding that file (again, the ELF)

5: Inode number of that file on the device (0 for regions not backed by a file); the region's size comes from the address range in field 1

6: Name associated with the region (in this case, this is a bit of the standard C library)

Regions with no name are called Anonymous regions.

This is a small program- some will have much more to read.

PAS Operations

The PAS is manipulated and examined with a variety of system calls.

- fork(2) : Create a PAS

- clone(2) : Create a PAS (same as above)

- _exit() : Destroy a PAS

- brk/sbrk(2) : Modify the heap size (the program break) or get its current size

- mmap(2) : Grow PAS, add a new region

- munmap(2) : Shrink PAS, remove a region

- mprotect(2) : Set region permissions

Week 5

Oct 6: Memory Address Translation

- How do we allow the illusion of process address spaces?

- What process translates virtual (linear) addresses that we use in code to actual memory locations?

- What needs to be given for this to happen?

The MMU

The Memory Management Unit is another case of the tight cooperation between the OS and the hardware. The MMU is designed to quickly perform simple algorithms to translate memory addresses between three different states, alongside and transparently to the CPU's execution.

The OS helps the MMU do this work with a set of data structures related to paging and segmentation.

There are three states for memory addresses in Linux.

- Logical Addresses- Hold a segment selector (ie, an index into the GDT) and an offset into that segment. These are basically unused in Linux since it uses 4 flat overlapping segments.

ds:0x8048958 ---> Using the selector given in the `ds` register and the offset `0x8048958`

- Linear/Virtual Addresses- Holds an index into a Page Directory, an index into the Page Table, and an offset into a Page. There is some extra metadata that is needed, in particular the pgd (Page Global Directory) for the process.

- Physical Addresses- Real addresses in physical primary storage. We don't usually see these addresses, nor are they very useful at the application level (embedded systems notwithstanding).

Segmentation

The first step in the translation from Logical Addresses to Linear/Virtual Addresses is Segmentation. This step is largely skipped in Linux, since Linux only has 4 segments, and they all overlap. It is still useful to know about, though.

- The given segment selector is held in a segment register, such as `ds` or `cs`.

- Essentially, an index into the GDT.

- The register also often holds some cached descriptors for optimization.

- The value provided is the offset into the particular segment.


The only real use of segmentation in Linux is to enforce the OS/Userland separation by use of the DPL bits for the segments.

Paging

The second step is to convert a Linear/Virtual Address to a Physical Address by Paging. Paging is performed by the MMU, and there are a number of OS-provided data structures to make this possible.

- The Page Directory holds entries that each point to a Page Table. Each process has its own Directory, which it knows by its `pgd` (page global directory) in its mm struct.

- A Page Table holds entries that map pages to page frames in physical memory. A page, we recall, is a fixed-size region of memory. By necessity, the page tables together need enough entries to cover the entire address space in pages.

- The Page Frames are a list of chunks of physical memory (in Linux and x86, they are 4k regions).

What is vital to keep in mind is that *a Linear/Virtual Address is not an address*. It is instead a collection of bits that encodes three pieces of data.

- Which page directory entry (ie, which page table) to look into

- Which page table entry (ie, which page frame) to look into

- How far into the page frame to look

There are many ways we can divide a 32 bit linear address between these three indices. A common way is as follows:

```
==========================================
|    10b    |    10b    |      12b       |
==========================================
   P. Dir       P. Tab      Page Offset
```

This is useful for a few reasons.

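- 2^12 = 4096 = 4KB page size, so the low 12 bits are the offset into the page

- 2^10 = 1024 entries per page table; 1024 * 4KB = 4MB covered by a single page table

- The remaining 10 bits select one of 1024 page directory entries; 1024 * 4MB = 4GB = addressable memory (on 32-bit x86)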

Other schemes may exist; for example, we could use a single-level table and give 22 bits to the page offset, implying page sizes of 4MB. However, this would come at a cost: for example, when a process is forked and writes to a common page, the whole page must be copied- 4MB extra, rather than 4KB. Essentially, larger pages make memory management coarser: more memory is wasted in partly used pages, and operations like copy-on-write get more expensive.

Oct 8: Virtual Memory

Virtual Memory serves to address three key concerns.

- Want to keep process data isolated (and user/kernel data, too)

- Want to disassociate variables from their location in memory (programmer should not need or use absolute addresses in phy. memory)

- We may need to use more memory than the system has available.

Fitting Processes into Memory

How do we keep all processes in memory? This seems impossible. They can all have up to 4GB of data, so we would need massive amounts of RAM available to us for this. The answer is that we don't.

Issues

- Limited RAM

- Many processes (average, >100)

- Programs are greedy and badly designed

- Each program needs to believe it has 4GB available to it

- Data fragmentation- we want it to seem like pages are all contiguous

- Dynamic allocation- how do we handle the growing and shrinking heap?

- We want to keep things as contiguous as possible.

The Memory Hierarchy

As we have seen in past CPSC courses, there is a rough hierarchy of data storage devices available to us.

```
                  speed   cost
                    ^       ^
Registers           |       |       |
CPU Cache           |       |       |
Prim. Memory        |       |       |
Sec. Mem (SSD)      |       |       |
Sec. Mem (Disk)     |       |       |
Optical Disk        |       |       |
Network Storage     |       |       |
                                    v
                                capacity
```

Ideally we want as much data to be stored at the top, but this is infeasible since there's a limited amount of storage up there. We can create the illusion that data lives at the best possible place (ie, in a register) when it may in fact be stored in some slower medium, and use some clever strategies to keep data that is going to be accessed more higher than other data.

Swapping

Swapping is a solution to this hierarchy problem. The basic idea of swapping is that we move regions of memory up and down the hierarchy as they are needed. Data which is being used right now, or will be soon, is kept at the top in the registers and caches. Data which may not be used for some time can be kept in secondary storage, or even in some other external medium.

Old way: Swap entire processes' pages in when the context changes. This works, but it sucks. It's slow and expensive.

Better-ish way: Split program into smaller units (overlays), each of which points to the next overlay, and swap only these bits (more locality). This is faster, but then the userland programmer has to deal with these overlays and control transfers- also no good.

The real way: Maintain a set of pages being used, called the "working set", and swap pages in and out as needed.

Dr. Locasto will post pictures of this with his set of slides for today. The important takeaway of the swapping process is that the OS needs to jump in only when a page that is not in the working set is referenced; this is when the OS must swap in that page. Otherwise the MMU can simply translate the addresses (this is much faster, of course). How often do these page faults happen? An answer will be given another day.

Page Table Entries

How big is a page table entry? It is 32 bits (one word on x86), but we don't need all 32 bits to reference a page frame: 4GB of memory in 4KB frames is 2^20 frames, so 20 bits are enough to name any of them. The other 12 bits are used for important metadata.

Page Table Entry

- 20 bits : Page Frame Index

- 'Present' bit : Is the logical page in a frame?

- Prot. bits : R/W/X (Read sometimes implies Executable)

- Dirty : Has the page been written to?

- Accessed : Hardware sets this, OS unsets it- used to find swap candidates

- User/Super : Ring 3 vs Ring 0 data

Oct 10: Virtual Memory Recap

Today was mostly a slower look at the contents of previous lectures.

Motivation: why paging?

Consider the most basic alternative to this complex method, a simple 1-1 map of addresses in phy memory to linear addresses.

- 2^32 entries

- Each being 2^3 or more bytes (at least 4 bytes for each address, plus room for metadata)

- Table size = 2^35 bytes

- Memory size = 2^32 bytes

Just storing the table (and not even the values) would take up more memory than we have available to us. Clearly this method isn't useful. This is exactly why we have paging, it reduces the memory cost while still allowing full access to the phy memory.

Paging is an efficient means to address all of physical memory.
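For comparison, the same arithmetic for the two-level 10/10/12 scheme from the Oct 6 notes, assuming 4-byte entries as on 32-bit x86:

```latex
\begin{aligned}
\text{flat 1-1 table} &= 2^{32}\ \text{entries} \times 2^{3}\ \text{B} = 2^{35}\ \text{B} = 32\ \text{GiB} \\
\text{page directory} &= 2^{10}\ \text{entries} \times 2^{2}\ \text{B} = 2^{12}\ \text{B} = 4\ \text{KiB} \\
\text{all page tables} &= 2^{10}\ \text{tables} \times 2^{10}\ \text{entries} \times 2^{2}\ \text{B} = 2^{22}\ \text{B} = 4\ \text{MiB}
\end{aligned}
```

Even fully populated, that is about 4 MiB of tables for 4 GiB of memory, and the page tables for unused 4 MiB stretches of the address space need not exist at all.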

Virtual Memory Mechanism

When a memory access is made by a process, there are two cases.

- 1 Process issues a memory access, and the relevant page is present (in a page frame on physical memory).

- Linear Address can just be mapped to a present page

- Page Translation done almost entirely by the MMU and requires very little OS support

- 2 Process issues a memory access, and the relevant page is _not_ present

- A page fault will be raised since the page is not present

- The OS has to service this page fault with a handler. The faulting linear address is placed in the CR2 register, and the OS tries to find the page (e.g. in swap).

- If the address is not valid for the process, the process receives SIGSEGV.

- The OS then has to pick a destination frame.

- If there's a free page frame, pick it and load the page into it

- If not, then we need to find a candidate to be swapped out, evict the candidate to disk and write our page in.

The second is clearly much slower and more costly than the first, and so avoiding page faults is extremely important for efficiency. This is handled by some paging algorithms.

Cost of page faults: Effective Access Time

The EAT is a metric that can be computed to give the average-case cost of memory access.

Let p = probability of a page fault (~0.001 average)

Let n = time for a normal memory access (~1e-9 s, ie 1 ns)

Let o = time overhead for servicing a page fault (~1e-3 s, ie 1 ms)

EAT = n * (1 - p) + p * o

Note that the overhead o is about six orders of magnitude greater than the cost of a normal memory access.

Week 6

Oct 17: Page Replacement

Why do we care about this? Resource Management is common to all systems. In the OS, this includes:

- Dynamic Memory Demands

- Multiplexing Primary Memory (via Paging)

- Multiplexing the CPU (via Scheduling)

- Concurrent Access

Selecting a Page

How do we select a page frame to evict from primary memory?

There are a number of algorithms to do this.

Naive Approach

Approach: Select Random Page

- Pros: Simple to implement, understand

- Cons: May select a heavily used frame

This is functionally useless, and gives the worst-case algorithm.

Optimal Approach

Select frame that is used furthest in the future

- Pros: Simple, most efficient algorithm possible

- Cons: /dev/crystalball is not currently implemented, making future prediction challenging

This gives us the best-case algorithm- can we approximate it with our physical limitations?

Simple Approach (FIFO)

FIFO - First In, First Out.

Select the frame with the oldest contents.

- Pros: Simple to implement, understand

- Cons: May evict a page that is in use

The problem here is that FIFO has poor knowledge of the page frame content's semantics. Can we improve it?

Second Chance FIFO

Evict as in FIFO, but first check the oldest page's accessed (R) bit: if it is set, clear it and move the page to the end of the list (its second chance) instead of evicting it

- Pros: Much better than FIFO

- Cons: Worst case same as FIFO. Shuffles the pages in the list.

Note, this is essentially equivalent to the clock algorithm, but clock keeps the frames in a circular list and advances a "hand" around it instead of shuffling pages.

We require hardware support for this algorithm (namely the 'accessed' bit).

Least Recently Used

Evict the frame that has gone unused the longest, by recording a timestamp of each page's last use (or by keeping a list ordered by last use, updated on every access).

- Pros: Close to optimal semantics

- Cons: Expensive implementation, hardware requirements

Can we avoid the need for updating the linked list, or for special hardware?

Simulate LRU with NFU and Aging

NFU - Not Frequently Used

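Keep a small counter per frame. At every clock tick, shift each counter right by one bit (aging it) and copy the page's R bit into the most significant bit, then clear R. Evict the page with the smallest counter.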

- Pros: Efficient approximation of LRU

- Cons: No strict order/tiebreak, small horizon of age

We don't have very many bits for a counter, so this doesn't give us a long window of time- but still, better than FIFO.

The algorithm is described well on Wikipedia [1].

As an aside, we can also use NRU (Not Recently Used) which keeps track of the Ref/Modified bits.

Key Idea, Recap

The problem of selecting a page to evict is a hard one. Finite state and limited resources prevent us from the ideal solutions, and we can't predict future page usage from past data to get the optimal solution.

Week 7

Oct 20: User-Level Memory Management

Key Question:

- What is the procedure to efficiently allocate dynamic chunks of memory for userland programs?

- Is there a semantic difference between malloc(small size) and malloc(big size)? If so, why?

malloc(3) and brk(2)

When you call malloc(3), the program may (but does not always) need to request more memory from the kernel. This is done with the brk(2) call.

Below is a snippet from the brk(2) manpage.

BRK(2) Linux Programmer's Manual BRK(2)

NAME

brk, sbrk - change data segment size

DESCRIPTION

brk() and sbrk() change the location of the program break, which defines the end of the process's data segment (i.e., the program break is

the first location after the end of the uninitialized data segment). Increasing the program break has the effect of allocating memory to

the process; decreasing the break deallocates memory.

As we can see, this is a fairly simple way to ask for more memory. But this isn't as easy as it seems, because the memory is dynamic- we are constantly requesting memory and handing it back with free(3), which returns it to the allocator's free lists and only sometimes all the way back to the kernel.

Fragmentation

Consider the following example of where a problem could arise:

Suppose we have 50 units of memory, labelled 0-49 (each character below stands for 10 units). [-----]

- We ask for 10 units, and are given 0-9. [#----]

- We ask for 30 units, and are given 10-39. [####-]

- We give back the 10 units from 0-9. [-###-]

Now we have a _fragmented data segment_, where there are 20 units available, but they're not contiguous.

This algorithm is called first fit- just use the first free block that is big enough. Another way to do this is best fit, which uses the smallest free block that is big enough. As it happens, these are basically equivalent in their failure to deal with fragmentation.

There are two kinds of fragmentation- External (fragmentation in the physical address space, lots of small useless pieces) or Internal (fragmentation in the virtual address space, lots of small useless pieces). These algorithms solve neither issue.

A better approach

We can easily do away with external fragmentation by allocating memory only in fixed size pieces. In particular, memory is only allocated to a PAS in page-aligned chunks. This way, there are no unusable gaps between allocated regions of physical memory. (This also lends itself nicely to identifying which process is using each page).

glibc's implementation of malloc(3) does just this. However, this only deals with external fragmentation- internal fragmentation is trickier.

The observation was made that we should use a similar strategy for the virtual address space, and as it turns out this is exactly what glibc does. In particular, it defines 'chunks' of memory that contain 4-byte objects, chunks that contain 8-byte objects, etc. By doing this, we can minimize the gaps in allocated memory. Since most data will fall nicely into one of these buckets, this strategy works fairly well.

Each chunk has two pieces of metadata at the start of it- its own size and the previous chunk's size. Since we can step forward by a chunk's size and backward by the previous chunk's size, the chunks basically form a doubly linked list.

This is also seen in the Buddy Algorithm in the kernel, which solves a similar issue.

Big malloc(3)'s, Small malloc(3)'s

What happens if you just want a small amount of memory? Generally, brk(2) won't even be necessary. The next available chunk entry that fits the new object can just be returned.

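If you want a big piece of memory, the allocator has to go to the kernel: glibc's malloc serves requests at or above its mmap threshold (M_MMAP_THRESHOLD, 128 KiB by default) with a fresh mmap(2) region, and grows the heap with brk(2) when a smaller request does not fit in the memory it already has (if it can).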

Final Note- Contiguous Memory

As a small side note, all of this memory _must_ be contiguous. If the user has a pointer to a sequence of bytes, and in the middle of those bytes is some other object, there is no way to 'skip' over the object. Memory must be given in contiguous sections.

Oct 22: User-Level Memory Management, Pt. 2

Starting Exercise - Simulating MM Algorithms

We started with an exercise (that was noted to be a probable question on an exam) to simulate a memory allocation algorithm on some sequence of requests. Below is an example given in class, with 4 different types of algorithm.

Initial State:

+----+------+---+--+----------+----+--+--+
|####|      |###|  |##########|    |##|  |
+----+------+---+--+----------+----+--+--+
0    10     30  45 50         80   90 95 100

(# = allocated, blank = free; numbers are byte offsets)

{B,W,N,F}F = {Best, Worst, Next, First} Fit

| Bytes Req. | BF | WF | NF | FF |
|---|---|---|---|---|
| 2 | 45-47 | 10-12 | 10-12 | 10-12 |
| 10 | 80-90 | 12-22 | 12-22 | 12-22 |
| 3 | 47-50 | 80-83 | 22-25 | 22-25 |
| 8 | 10-18 | 22-30 | 80-88 | 80-88 |
| 1 | 95-96 | 83-84 | 88-89 | 25-26 |
| 11 | 18-29 | Fail | Fail | Fail |
| Leftover (free bytes) | 5 | 16 | 16 | 16 |

Ranges are [start, end), so 45-47 is 2 bytes.

External vs. Internal Fragmentation

What is the difference between the two?

- External fragmentation arises when we have many processes being dynamically allocated memory from a single physical address space. We may end up with small non-contiguous pieces that no process can use, since the processes all need arbitrary amounts of bytes.

- Solution: paging. Only give out memory in fixed-size (4KB/8KB) pages. Because pages are translated through the page table, a process's pages need not be physically contiguous; they are virtually contiguous but can be scattered across the physical address space.

- Internal fragmentation is the same idea, but inside each allocated unit: space within a page (or chunk) that was handed out but is never actually used.

- Never completely solved, but glibc's algorithm gives a decent solution (see Oct 20).


Memory Management Terms

What is the difference between the following?

- malloc - User level. Return a pointer to a region in the heap at least as long as was requested.

- calloc - User level. Like malloc, but takes an element count and an element size, and guarantees that the allocated bytes are set to zero.

- realloc - User level. Given a previous allocation, change its size. The block may have to move, so the returned pointer can differ from the old one.

- free - User level. Given a previous allocation, 'recycle' it by putting it back into the free list. Does not delete the actual data!

- mmap - System Call. Generic call to map a new region into the address space, either anonymous memory or the contents of a file (shared libraries, etc). munmap(2) removes a mapping again.

- brk - System Call. Increase or decrease the program break (i.e., change the heap size).

Metadata in an allocation

How does the free(3) call know how long the entry was? The answer is that glibc does some book-keeping on every allocation, by using a few bytes before and after the requested data.

The best place for more information on these chunks and their semantics is the Phrack article "Once upon a free()", which details this metadata (and how to pwn it). In brief:

+-------+ ---+
|prev_sz|    |
+-------+    |
|  sz   |    |  One 'Chunk' (Alloc)
+-------+    |
| data  |    |
:       :    |
+-------+ ---+
|prev_sz|
:       :

The bytes allocated are increased by 8 (for the header) and then padded up to the next multiple of 8. For example, malloc(0) gives an 8-byte chunk and malloc(7) gives a 16-byte chunk.

The size entry has some special semantics: since chunk sizes are always multiples of 8, the lowest-order bits of the size are always zero, so they are reused as flags recording whether the previous chunk is in use and whether the region is mmap'd.

Oct 24: Kernel Memory Management

While user-level memory management is 'solved' with paging and efficient chunk allocation, there's still the issue of kernel memory allocation. The problem here is that the kernel can't offload the work to anybody else: it is responsible for all physical memory management.

There are three key steps to kernel memory management:

- Zone Allocator

- Buddy System

- SLAB/SLOB/SLUB Allocator

Most memory requests are initiated by a call to `kmalloc(size, flags)`. This looks similar to `malloc`, but the flags argument tells the allocator what it is allowed to do while satisfying the request (in particular, whether it may block/sleep, start I/O, perform FS operations, etc). A typical value is `GFP_KERNEL`, which allows blocking, I/O, and FS operations.

Zone Allocators

The first step in finding some free physical page frames for the kernel is the Zone Allocator. Zones are regions of physical memory defined by hardware constraints. For example, on 32-bit x86:

- ZONE_DMA: memory usable for DMA (Direct Memory Access) by legacy devices. Lowest 16MB

- ZONE_NORMAL: 16MB - 896MB

- ZONE_HIGHMEM: 896MB+

Each of these zones represents a contiguous region of physical page frames with similar semantics. This helps to group together data. Each of the zones needs to have its own internal allocation, which is satisfied by the Buddy System.

The Buddy System

It's vital to avoid external fragmentation in memory allocation, i.e., we need to keep large regions of contiguous memory available for satisfying requests. Paging solves this for virtual addresses (pages from all over physical memory get mapped into a 'virtually' contiguous area), but relying on it alone has drawbacks:

- Some uses _need_ physically contiguous memory (e.g., DMA buffers)

- We want to avoid page table manipulation since it's expensive (and avoid TLB flush)

- We want to support big (4MB) pages

The solution is to group together 'free lists' of same-sized blocks.

There are 11 lists maintained (per zone) for this purpose. The first list holds blocks of 2^0 = 1 page (i.e., single free pages whose buddy is not free). The next holds blocks of 2^1 = 2 contiguous pages, and so on, up to the last list, whose blocks are 2^10 = 1024 contiguous pages (4MB with 4KB pages). Blocks move between the lists as needed: a larger block is split in half to satisfy a smaller request, and when a freed block's buddy (the neighbouring block of the same size) is also free, the two are merged into one block on the next list up.

By grouping together free page chunks like this, we can quickly and easily find large enough regions of contiguous page frames to satisfy requests of all sizes.

SLAB Allocator

The last step is to carve the page frames obtained from the buddy system into the objects that callers actually ask for. The key observation here is that different data types may affect how a memory region is used, so we should try to group like data.

Furthermore, the kernel tends to "burst" some memory request types, and so we should keep a list for these types to quickly satisfy many requests.

The key idea is to keep memory in a few different slabs, based on its semantics and frequency of request. Three slab lists are maintained: full slabs (which have no objects left to give), partial slabs (which have some left to give), and empty slabs (all of whose objects are free).

Refer to https://www.kernel.org/doc/gorman/html/understand/understand011.html for an explanation of slabs in detail.

Week 8

Oct 27: System Startup

The focus of this lecture (and a few subsequent ones) is how we can take a machine designed for sequential computation and use it for 'concurrent' computation. This starts right from boot, where that transition is made.

Why is System Startup Interesting?

System Startup is a topic that bridges together a lot of the concepts that we already know.

- Interesting technical challenges associated with bootstrapping a running OS

- Deals with interrupts (setting up the IDT), concurrency, scheduling, and processes.

- This is where most of the setup for the data structures we have seen (IDT, GDT, page tables/directories, task list, etc) is done.

The central job of system startup is to get a stable OS running on the hardware. From there, the OS can manage the rest of the complex tasks, but until it's in memory and running we don't get any help.

Brief Overview

There are generally three steps to get the hardware from an unknown state into some well-defined state that a kernel can execute in.

- 1) Initial startup program in ROM

- Reset the hardware

- Load the BIOS (Basic I/O System)

- 2) BIOS Program

- Initialize Devices

- Transfer control to the bootloader

- 3) Bootloader (GRUB, LILO)

- Choose the OS and boot options

- Transfer control to the OS load routine

Setting up the Execution Environment

The job of the kernel load routine is to set up the data structures and the environment for the kernel to begin its life. But wait- isn't the load routine in the kernel? How can it load the kernel?

As it turns out, the load routine is a little piece of uncompressed code whose job (among other things) is to decompress the rest of the kernel image and start running it.

From a high level, the following must be completed before the OS can be 'live'.

- CODE: Load the kernel into memory (decompress it)

- DATA: Initialize its data structures

- COMPUTATION: Create some initial tasks

- SCHEDULING/MULTIPROCESSING: Transfer control to/from/between these tasks to do useful things

We dove into the actual implementation of these startup routines in class, but rather than give a trace of this, I think it's more useful to peek through LXR for yourself.

- http://lxr.cpsc.ucalgary.ca/lxr/#linux/arch/x86/boot/header.S#L241 is where everything starts.

- http://lxr.cpsc.ucalgary.ca/lxr/#linux/arch/x86/boot/main.c#L128 is the next step, the 'main' routine which sets up some hardware and then transfers from real mode to protected mode

- http://lxr.cpsc.ucalgary.ca/lxr/#linux/init/main.c#L517 is the start_kernel() function which does a lot of the heavy lifting

- http://lxr.cpsc.ucalgary.ca/lxr/#linux/init/main.c#L416 is where the rest of the work is done (including getting the first task going and starting scheduling)

Oct 29: Process Scheduling Concepts and Algorithms

Scheduling is one of the most important jobs of a modern Operating System.

- How can we multiplex an extremely important resource (namely, the CPU)?

- How do we decide who to give the CPU to?

- How often should the kernel ask this? How do we balance the overhead of the context switch with the need for many processes to get time?

- How do we pick the next candidate?

As was explained earlier in the course, the role of scheduling is to provide the illusion of concurrent execution on an inherently sequential machine.

Quick note: the process list and the run queue are not the same thing! The process list contains every task, whether or not it needs CPU time. The run queue is the subset of tasks that are runnable, i.e., waiting for CPU time.

Scheduling Considerations

An important distinction is made between policy and mechanism. A mechanism's job is to implement and enforce a policy, and a policy is an abstract specification of what should be done under given conditions. In an ideal world, many policies could be implemented on a similar code base with only minor modifications needing to be made. Good code practice is to separate the mechanism and policy when possible.

Policy Considerations

- Priority- scheduling is fundamentally a prioritization problem.

- Fairness- make sure that every process gets a slice (maybe not the same slice, but a slice nonetheless)

- Efficiency- we should spend time doing useful work, and not scheduling the work.

- Progress- programs need to make progress by getting CPU time. The scheduler should enable this, not hinder it.

- Quantum- what is the base amount of time to give the CPU to a process?

- Preemption- should the OS be allowed to interrupt a running process and take the CPU back?

Scheduler Metrics

- Time

- Work Type

- Responsiveness (delay)

- Consistency (delay invariance)

Preemption